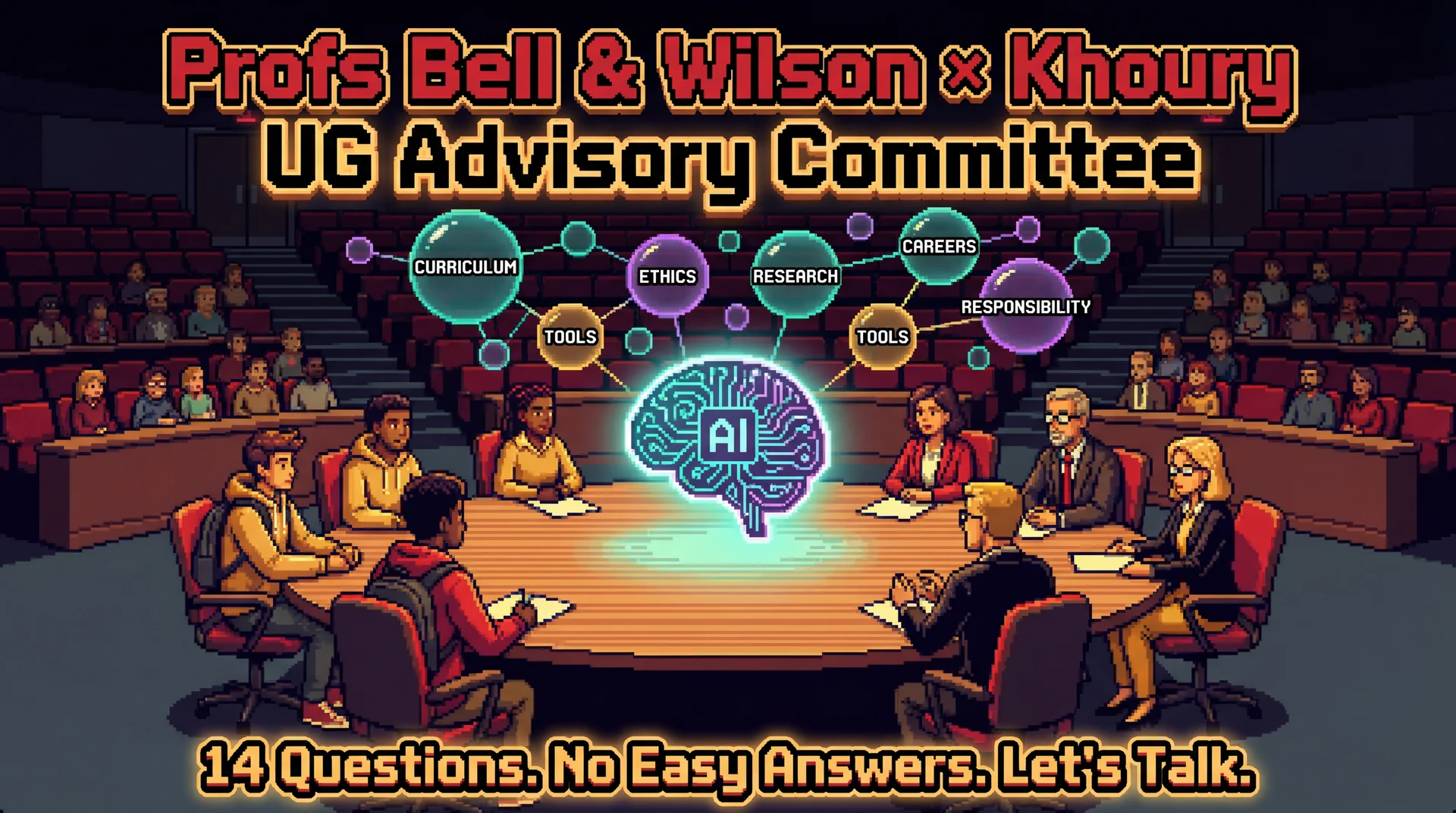

An Undergraduate Student & Faculty Conversation

Prof. Jonathan Bell & Associate Dean Christo Wilson

Organized by the Khoury UG Advisory Committee

©2026 Jonathan Bell, CC-BY-SA

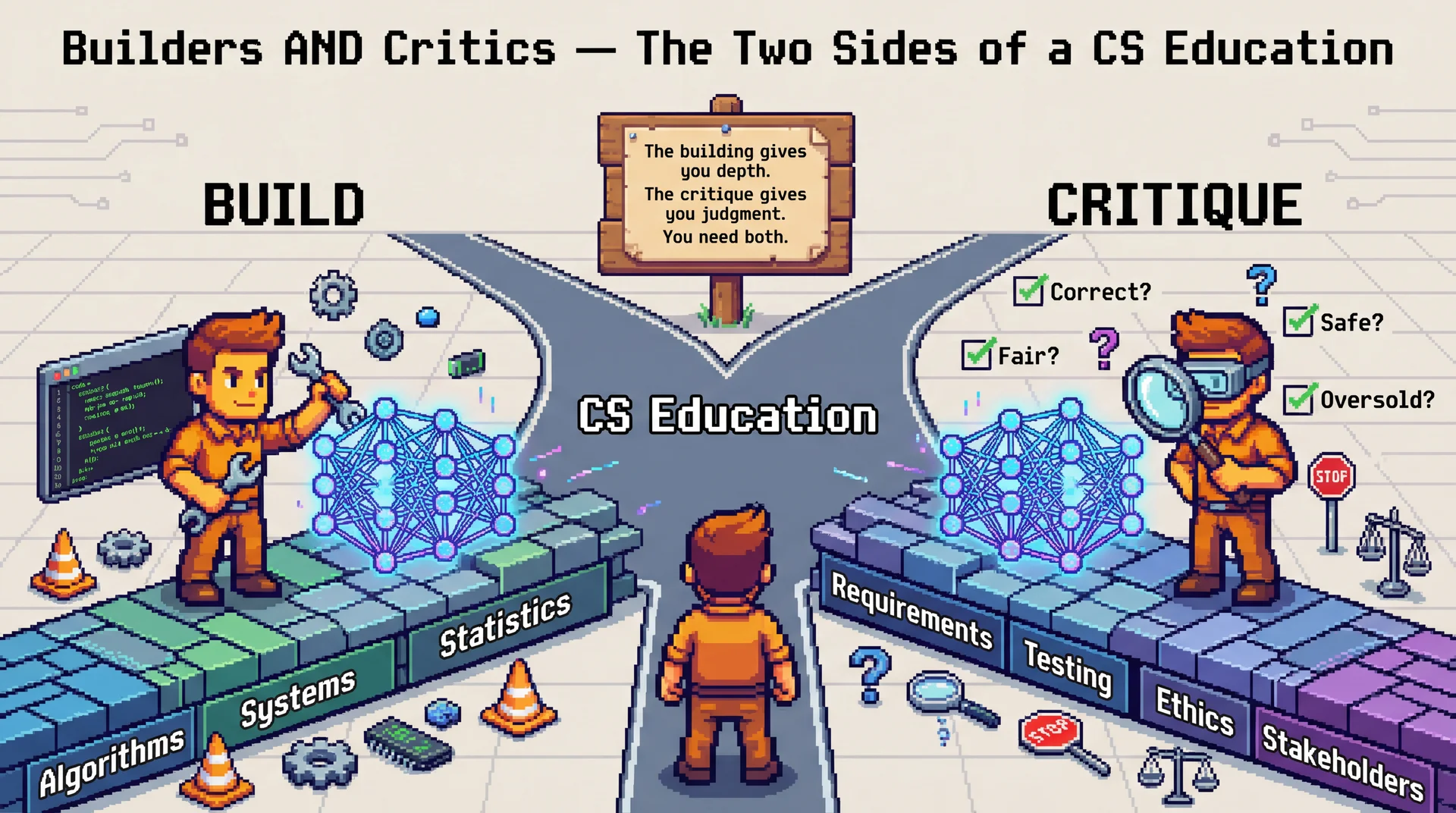

Q1: What Is Khoury's Role in AI?

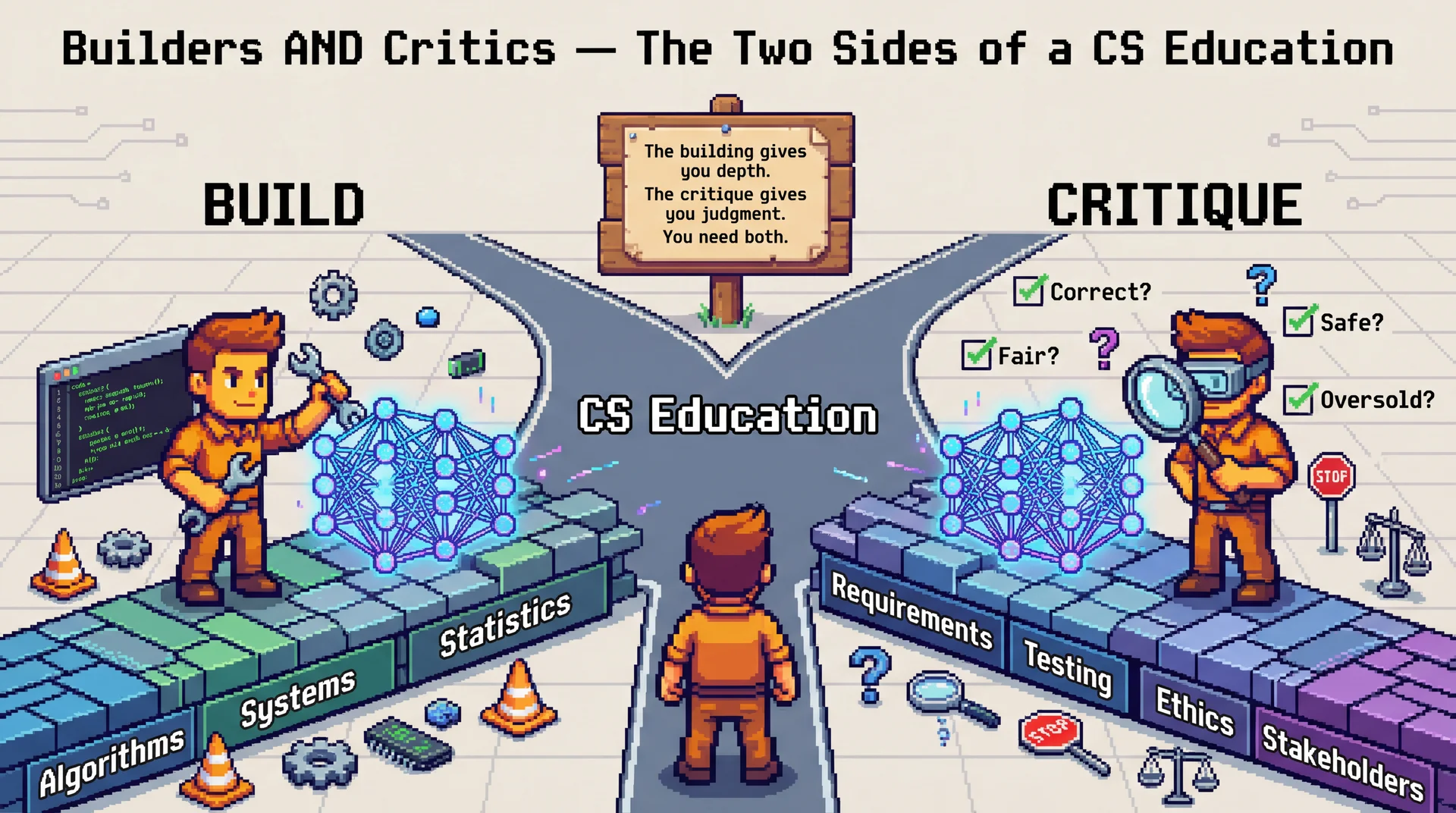

Are we primarily builders of AI systems, critics and auditors of them, or both?

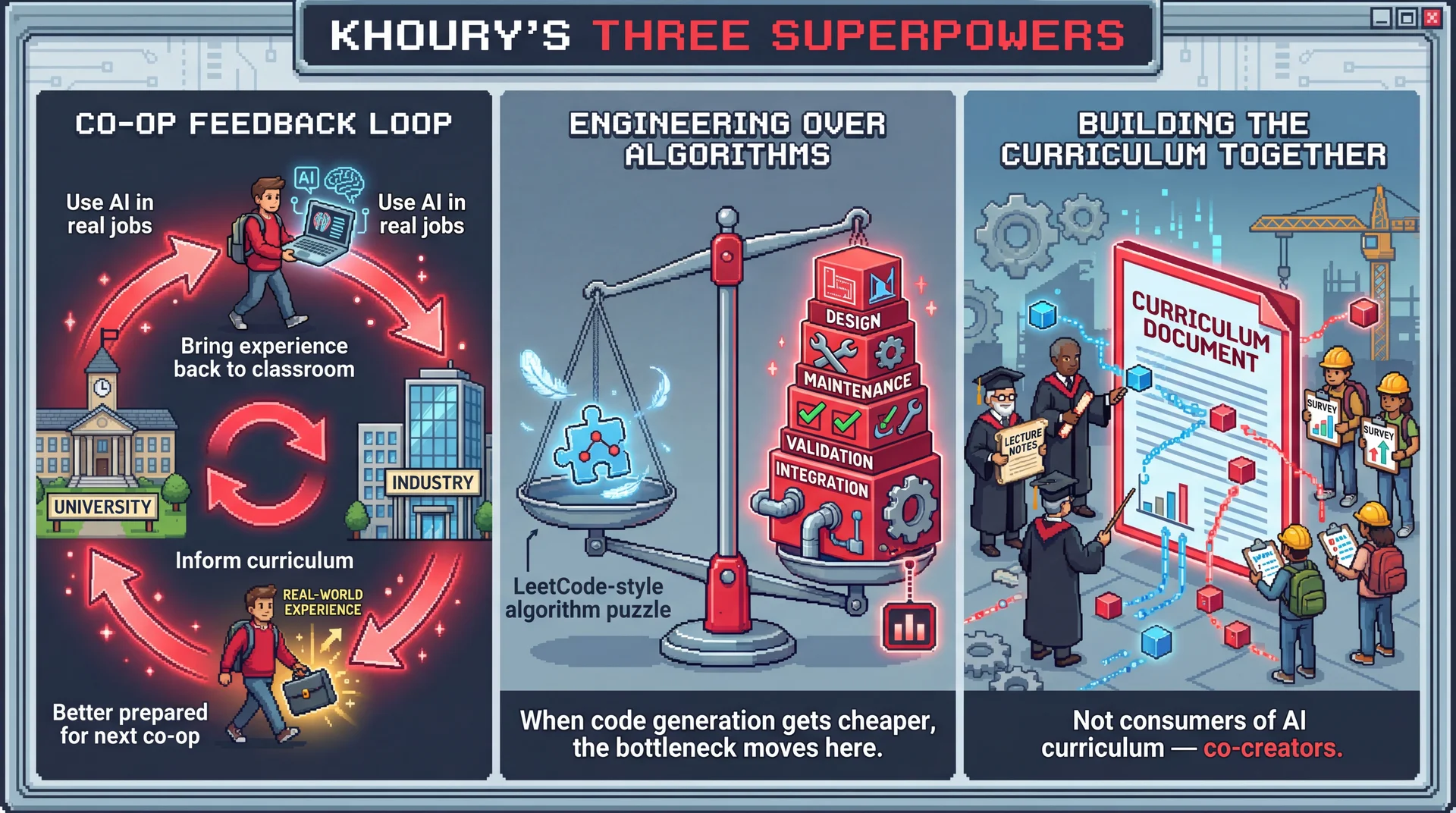

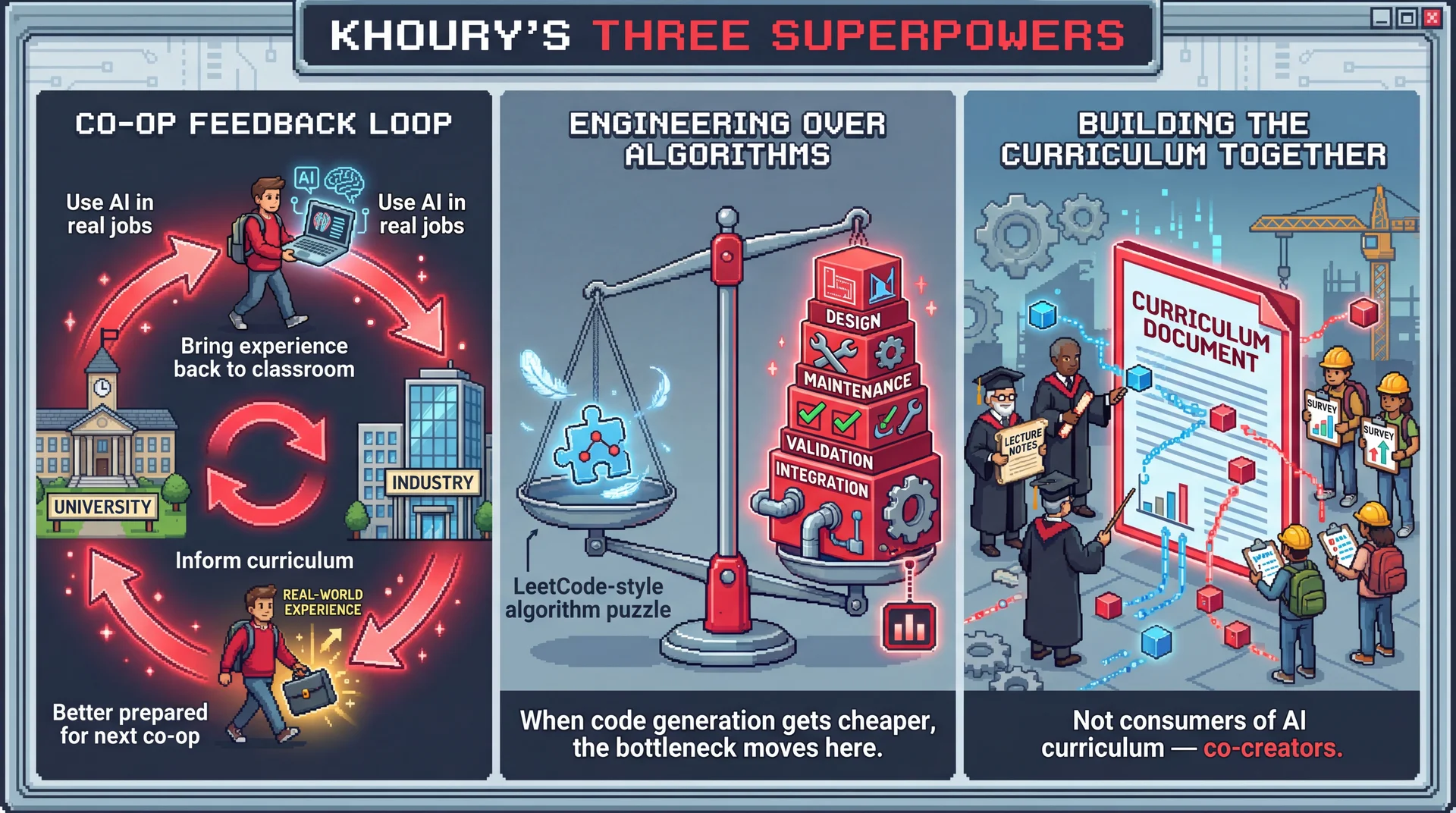

Q2: What Differentiates Khoury's AI Education?

What do we uniquely offer students that they couldn't get elsewhere?

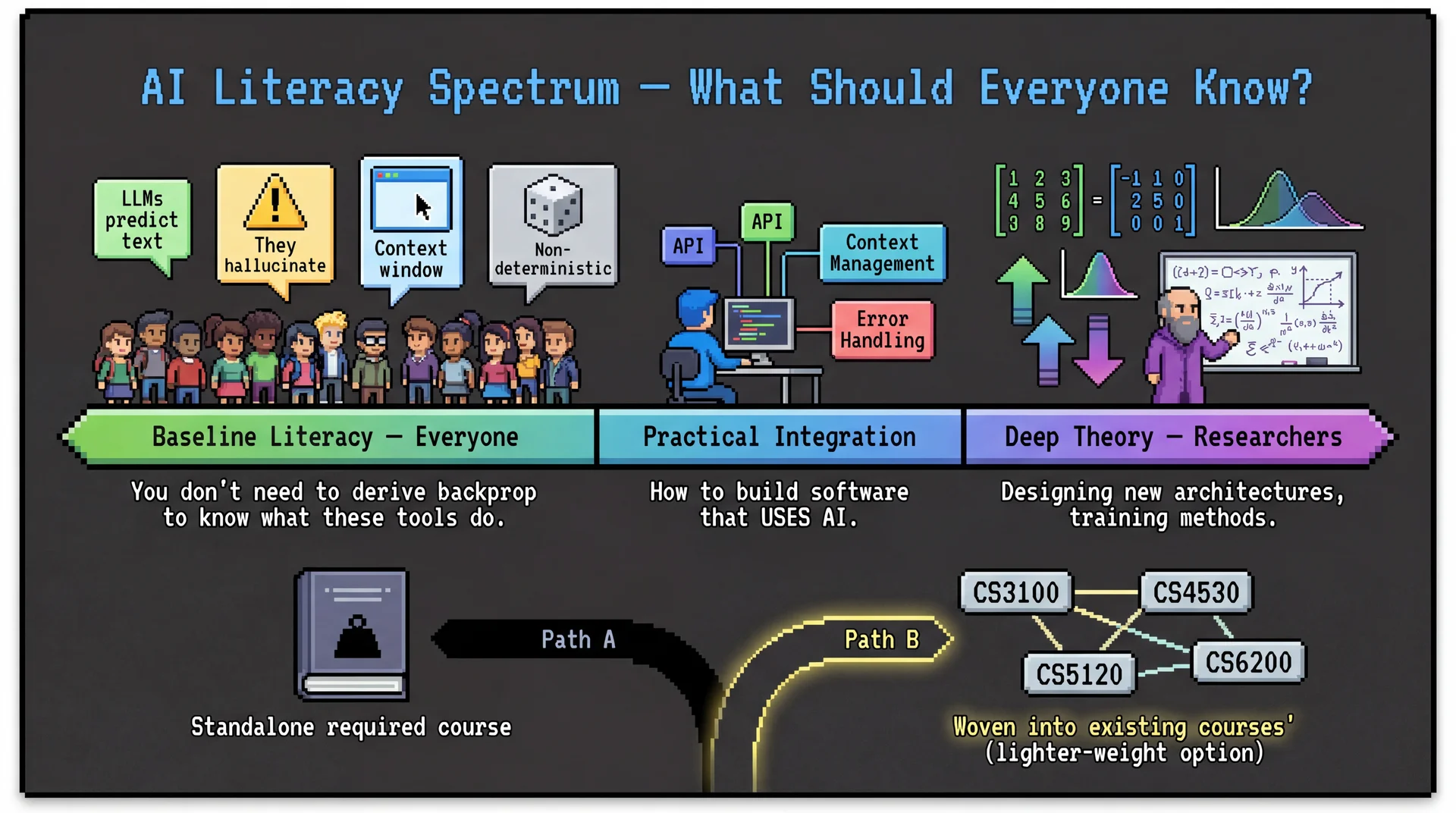

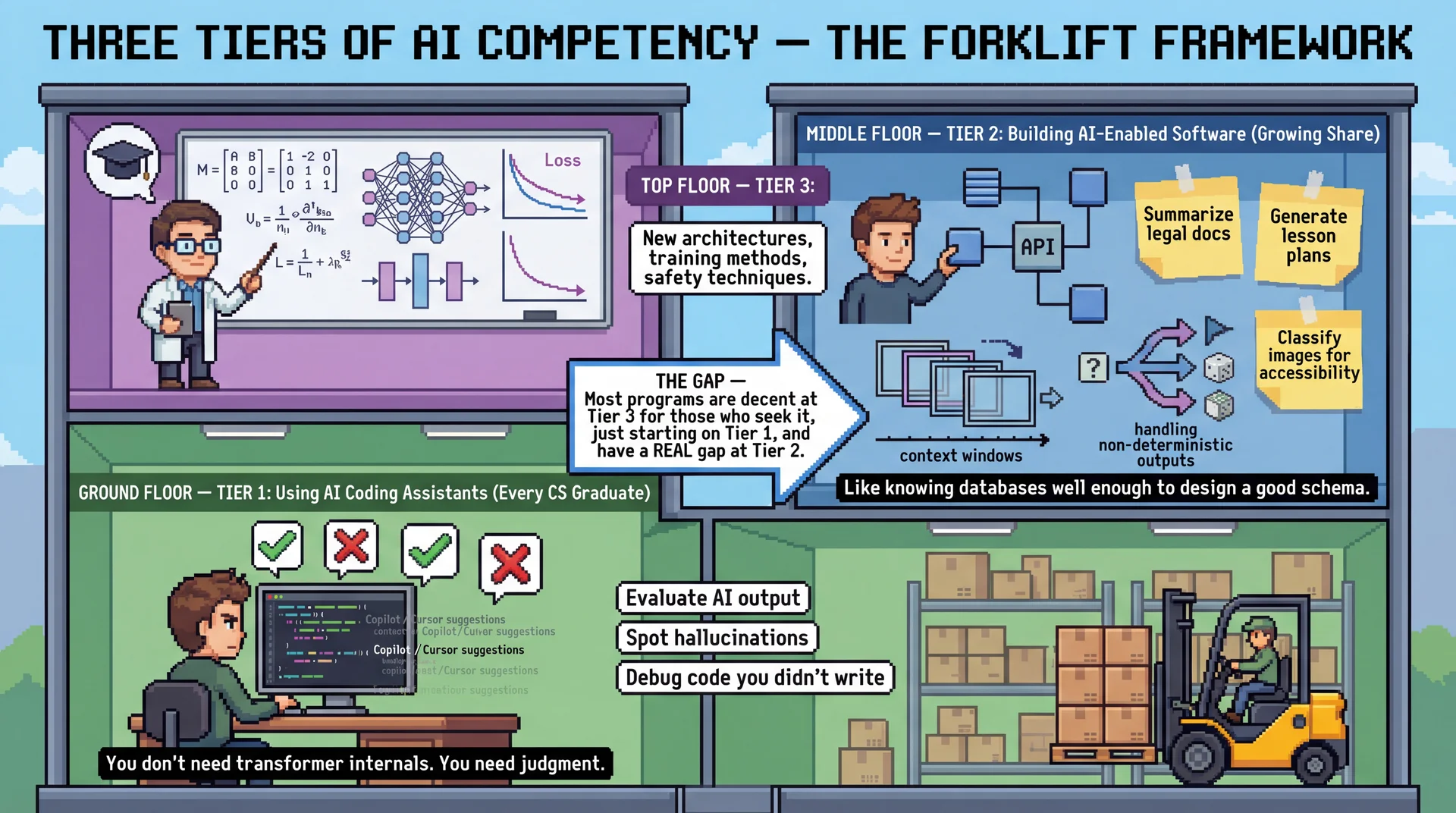

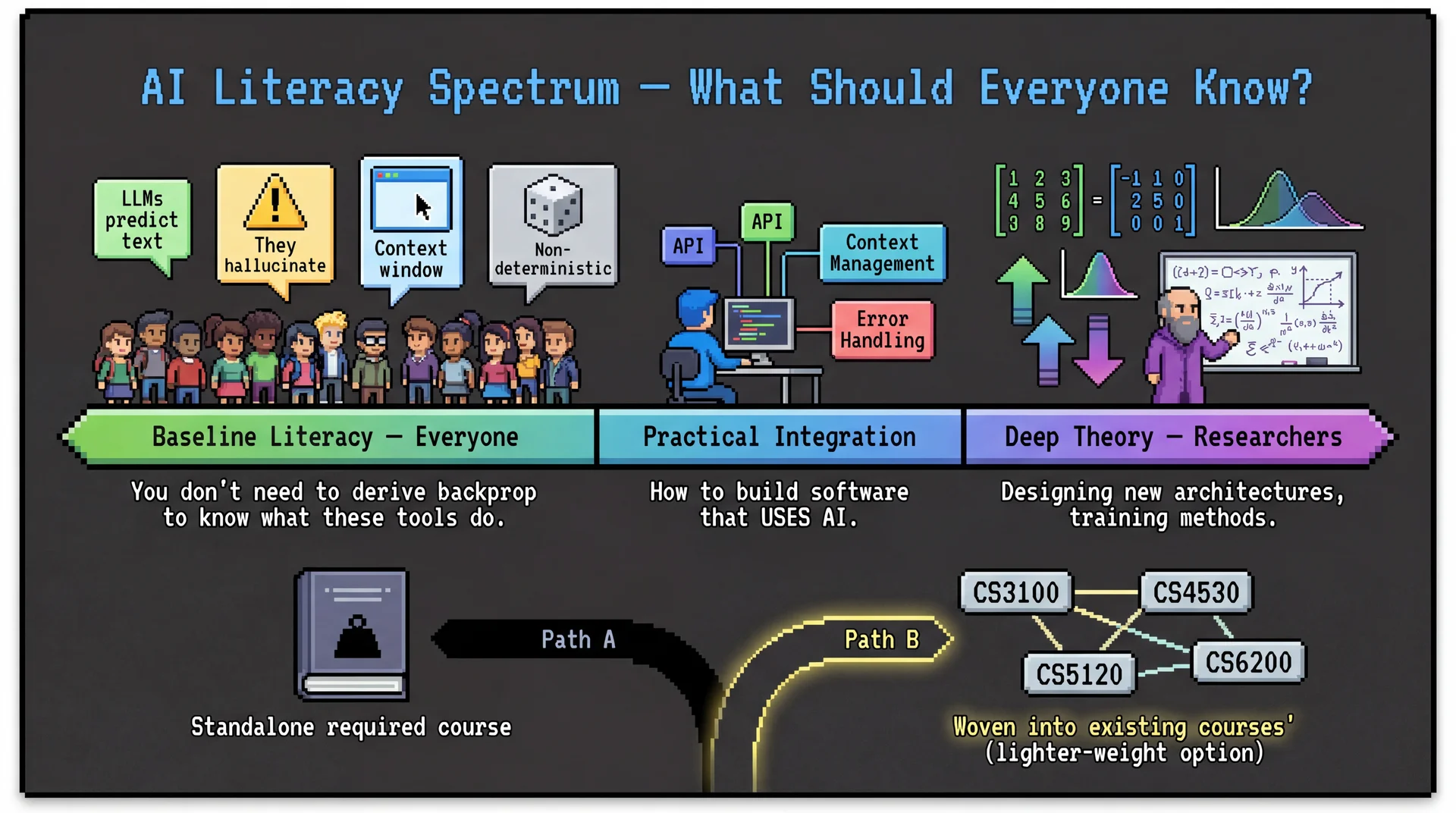

Q3: Should AI/ML Be Required for All CS Majors?

If AI is becoming foundational, does it belong alongside systems and algorithms as core knowledge?

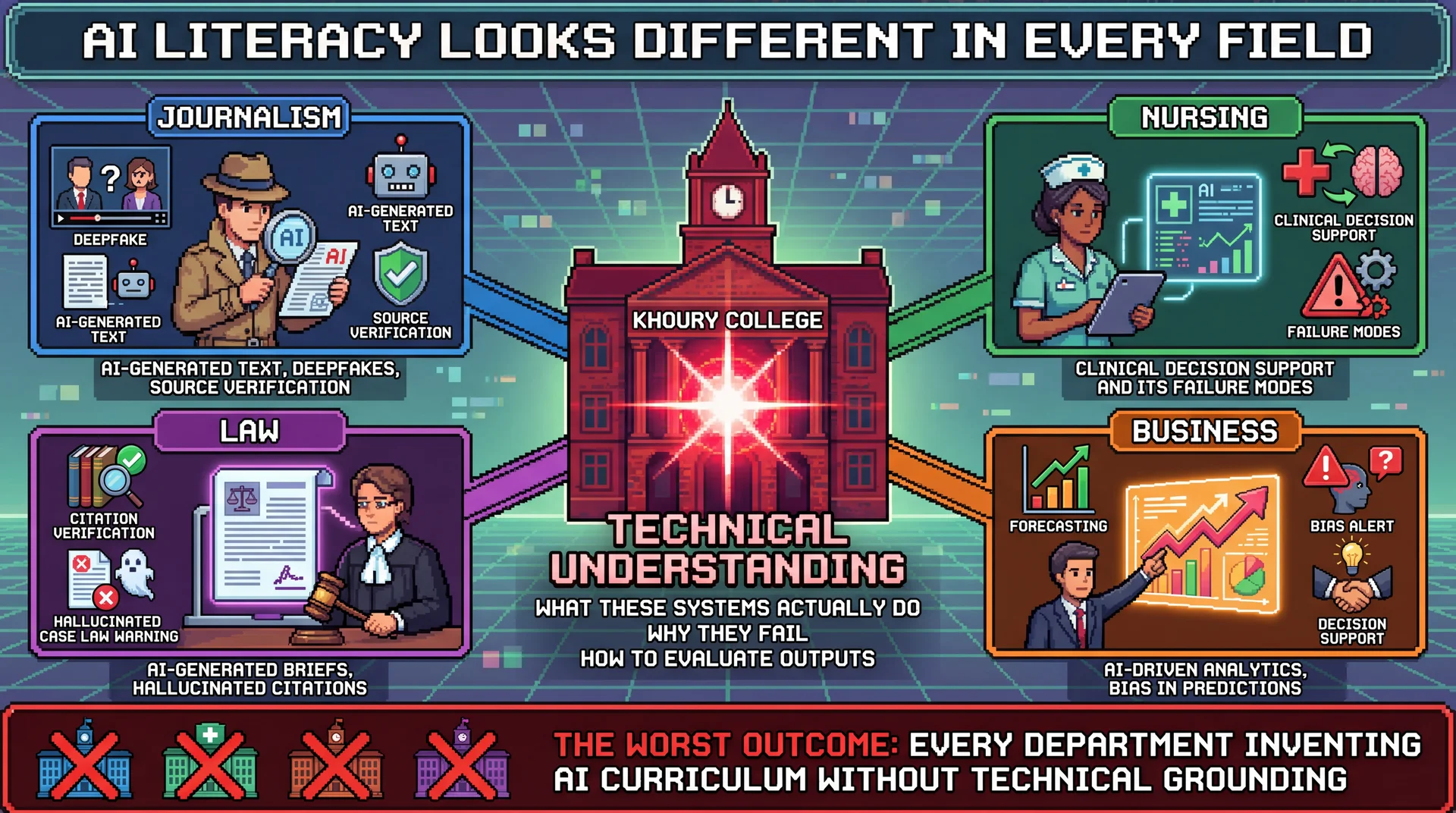

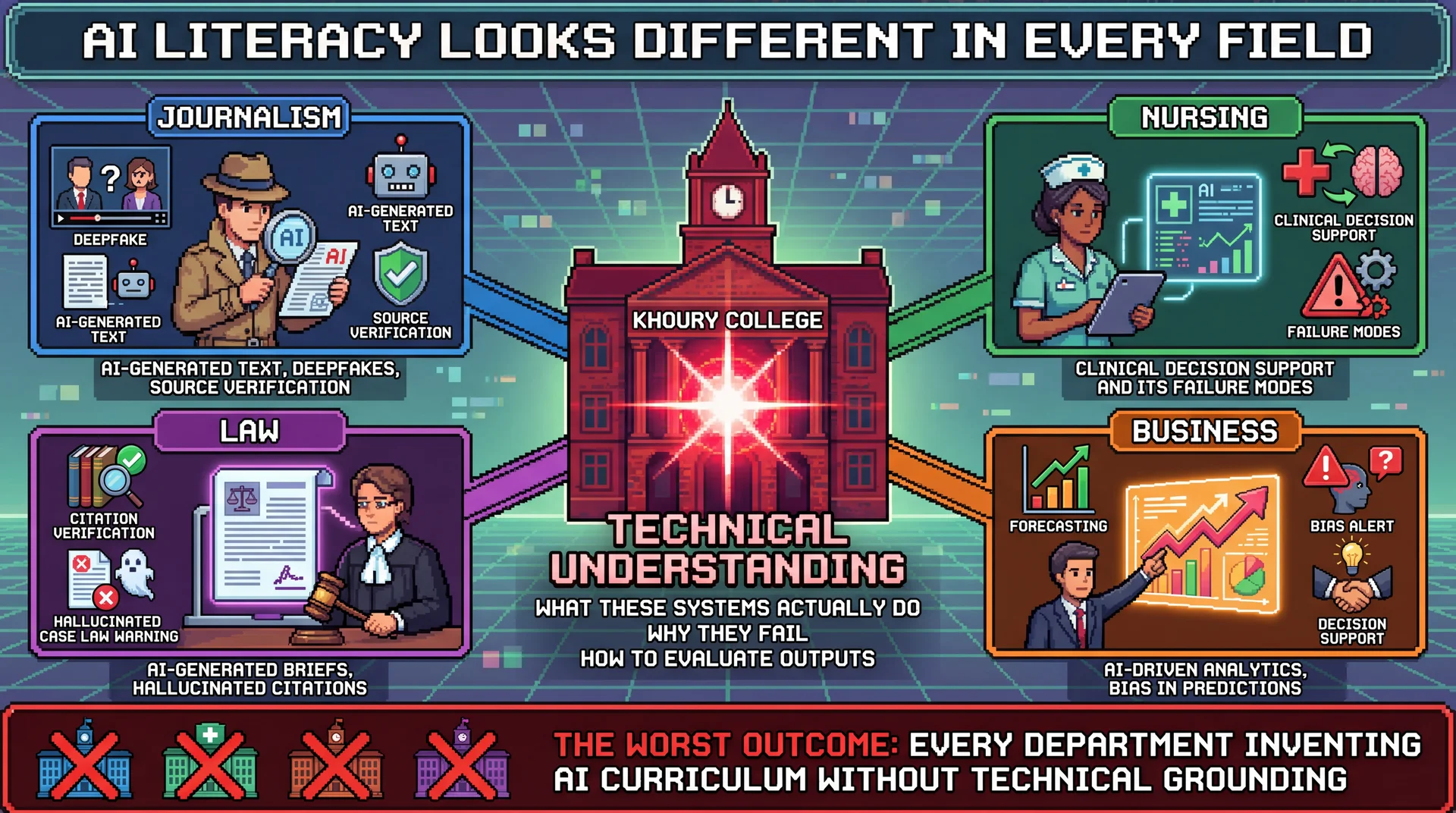

Q4: Should All University Majors Get AI Training?

Should there be required foundational AI courses beyond Khoury?

Should students first learn how transformers work, or how to effectively use them?

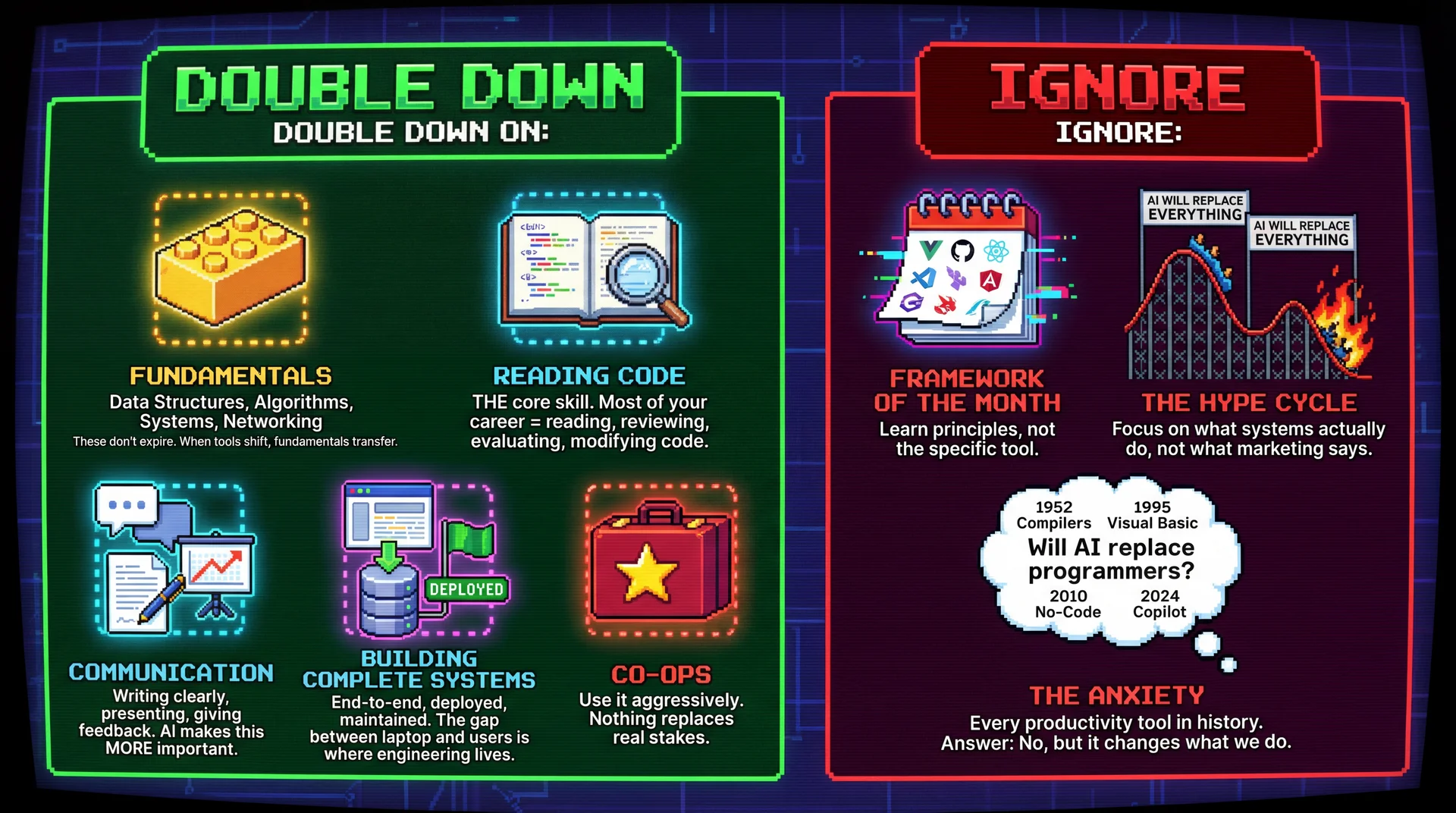

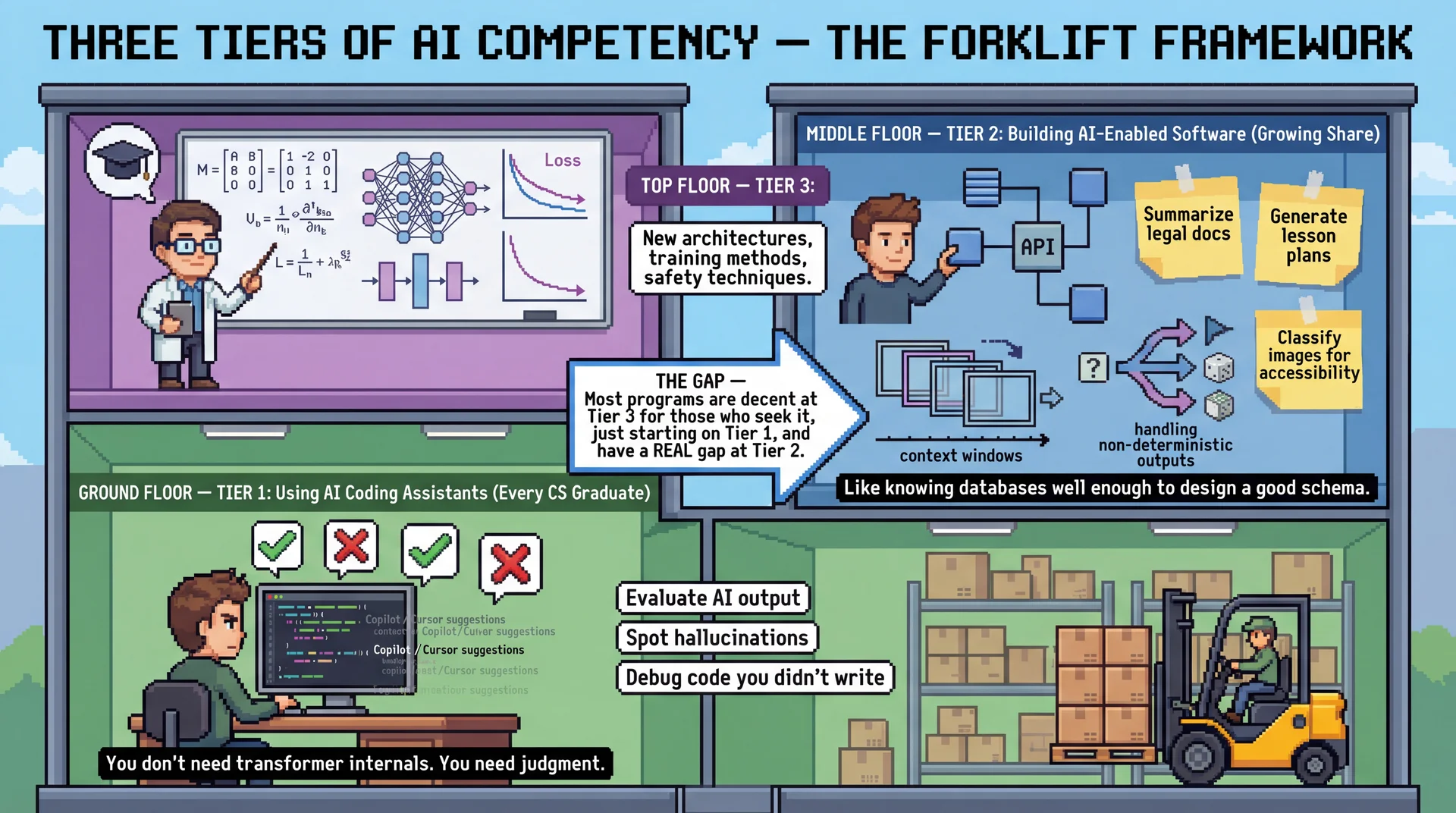

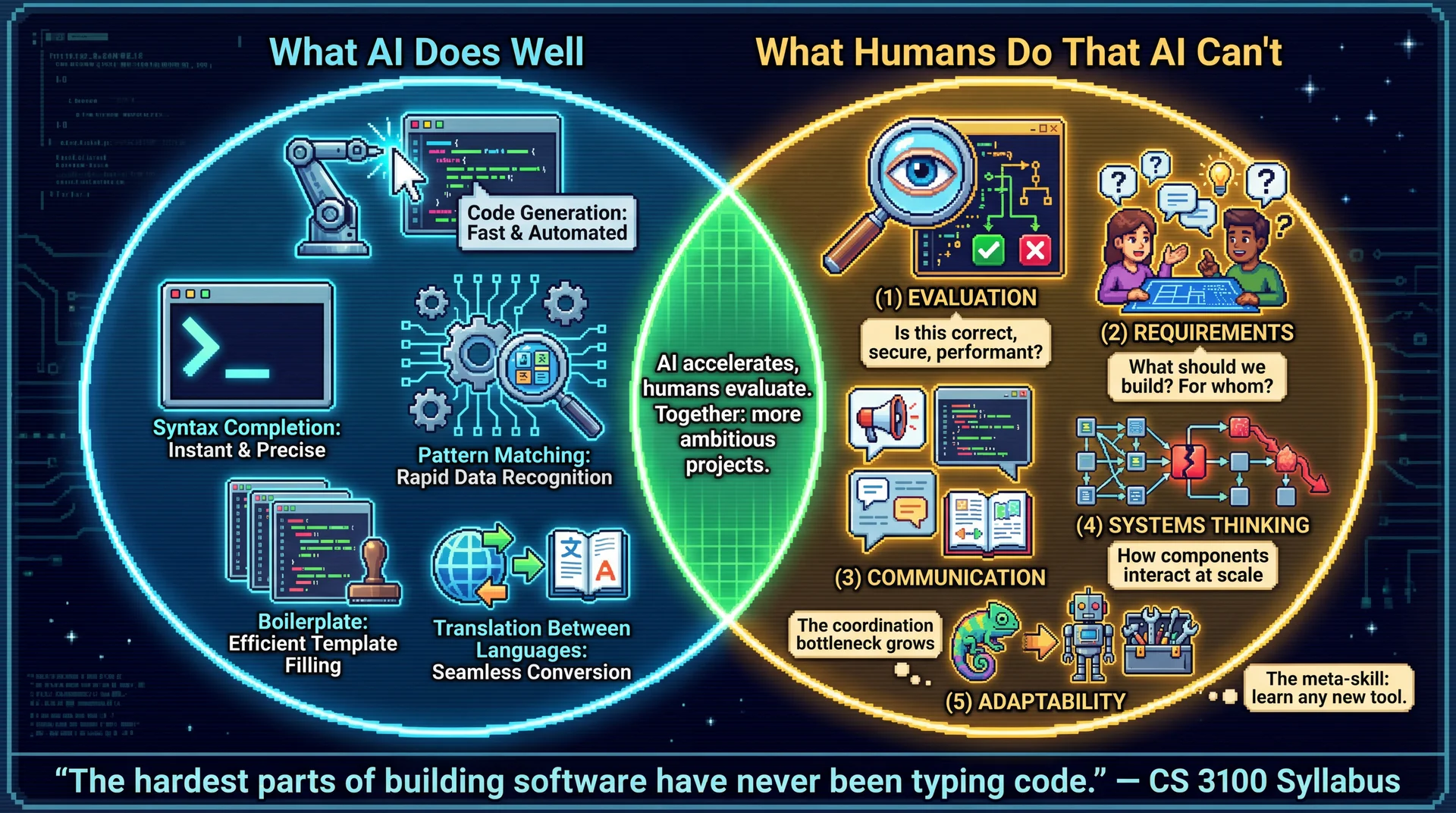

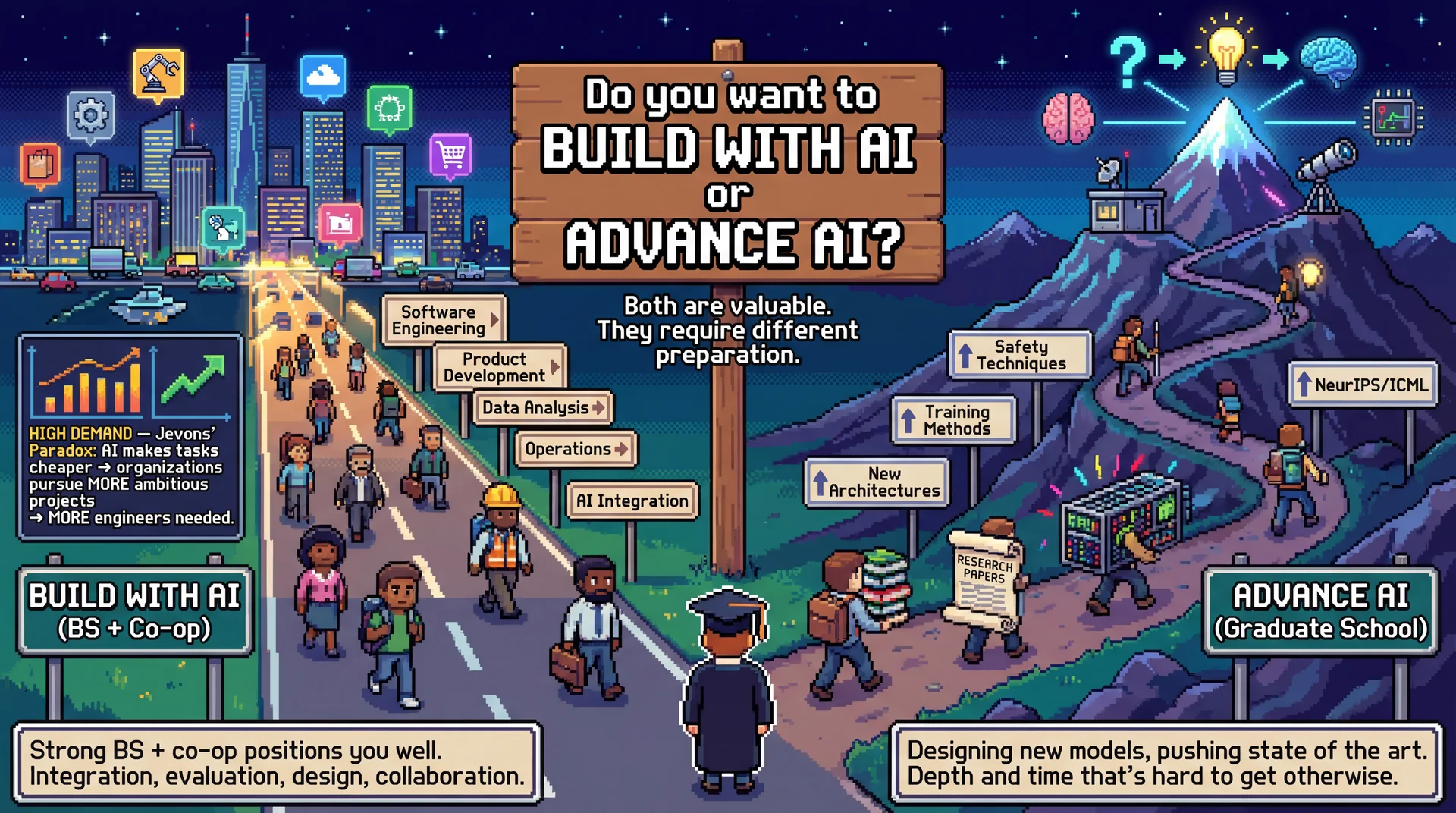

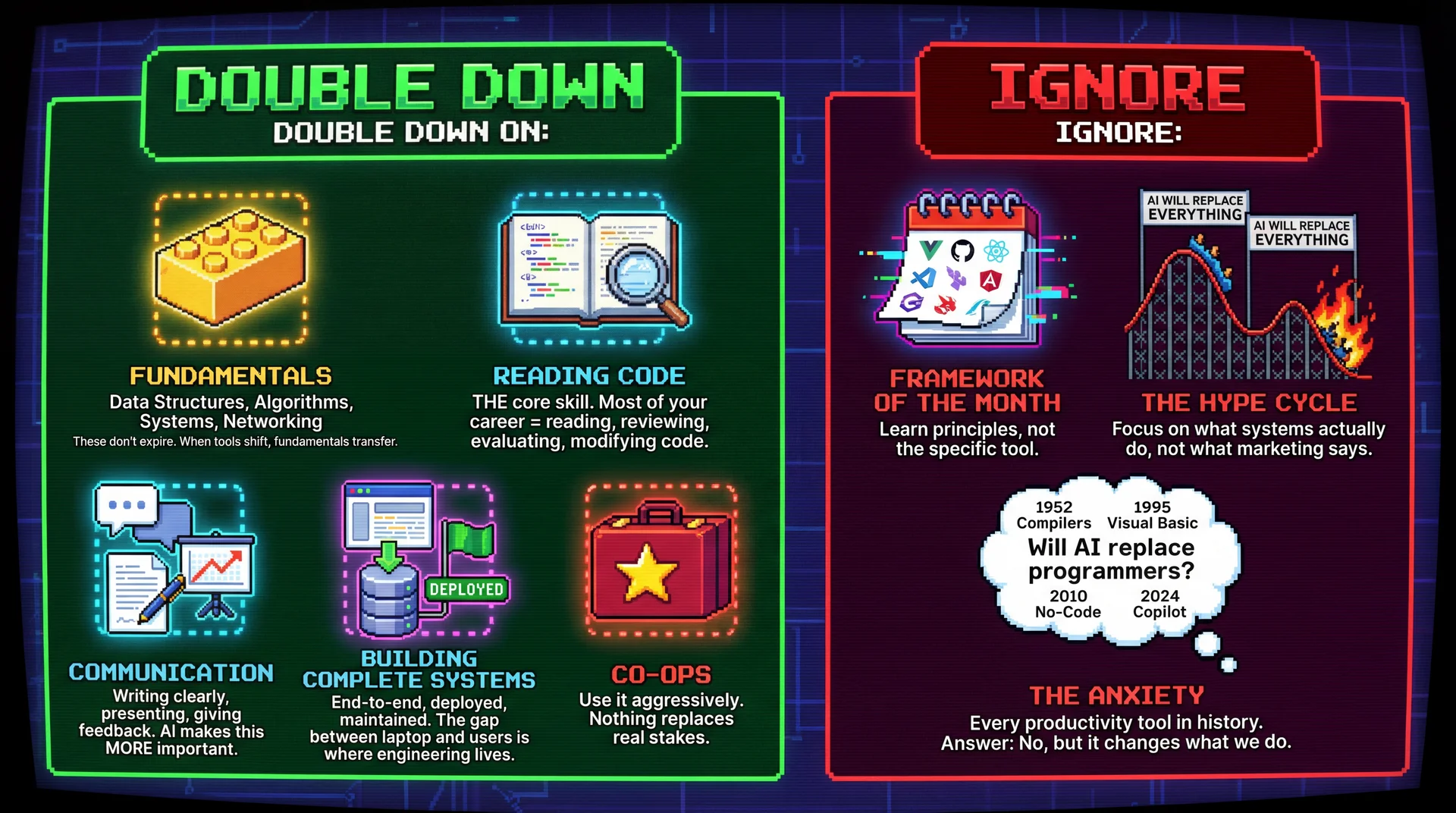

Q6: What Skills Matter Most in an AI-Driven Workforce?

Math depth? Systems thinking? Product intuition? Ethics? Something else?

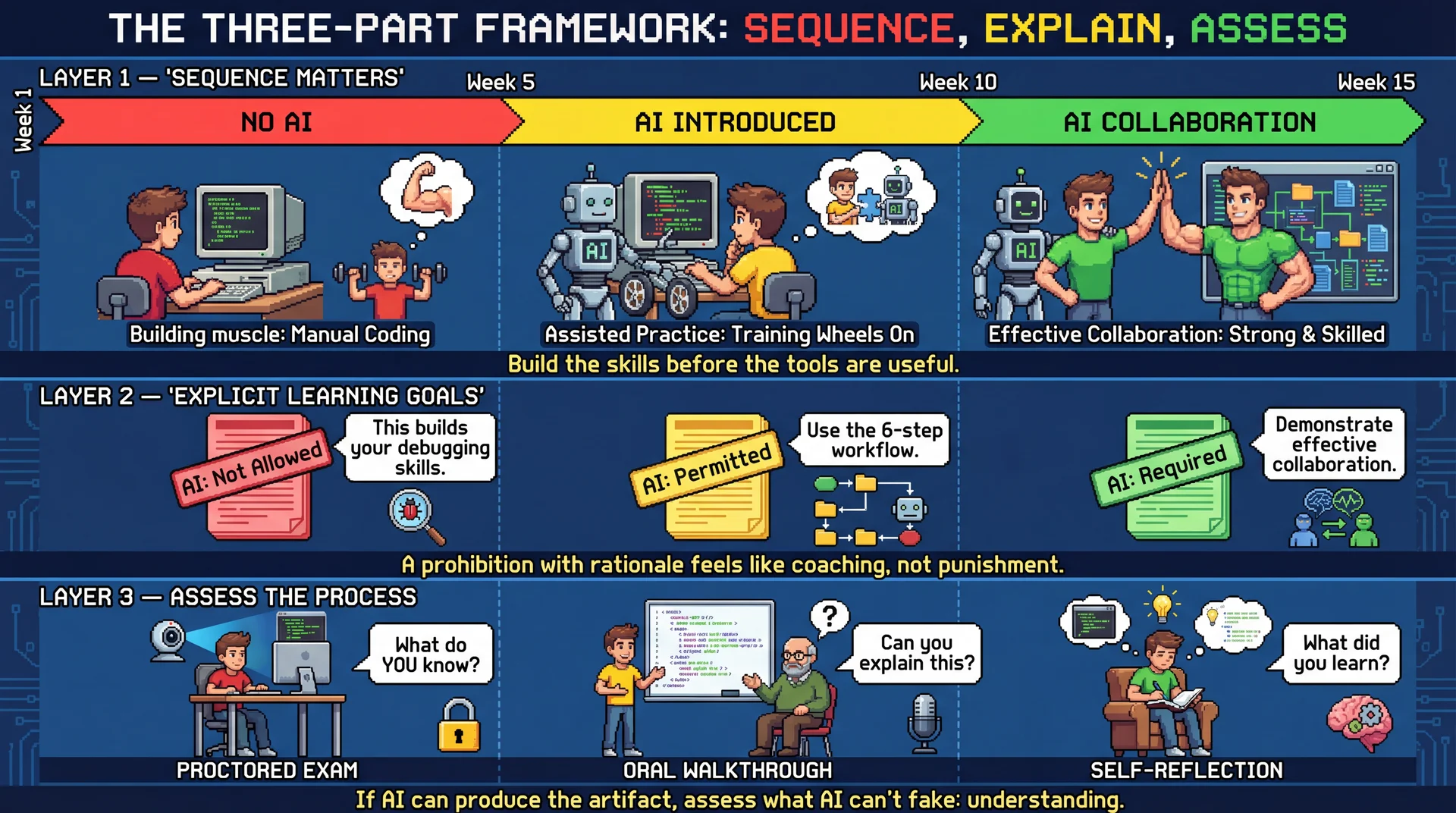

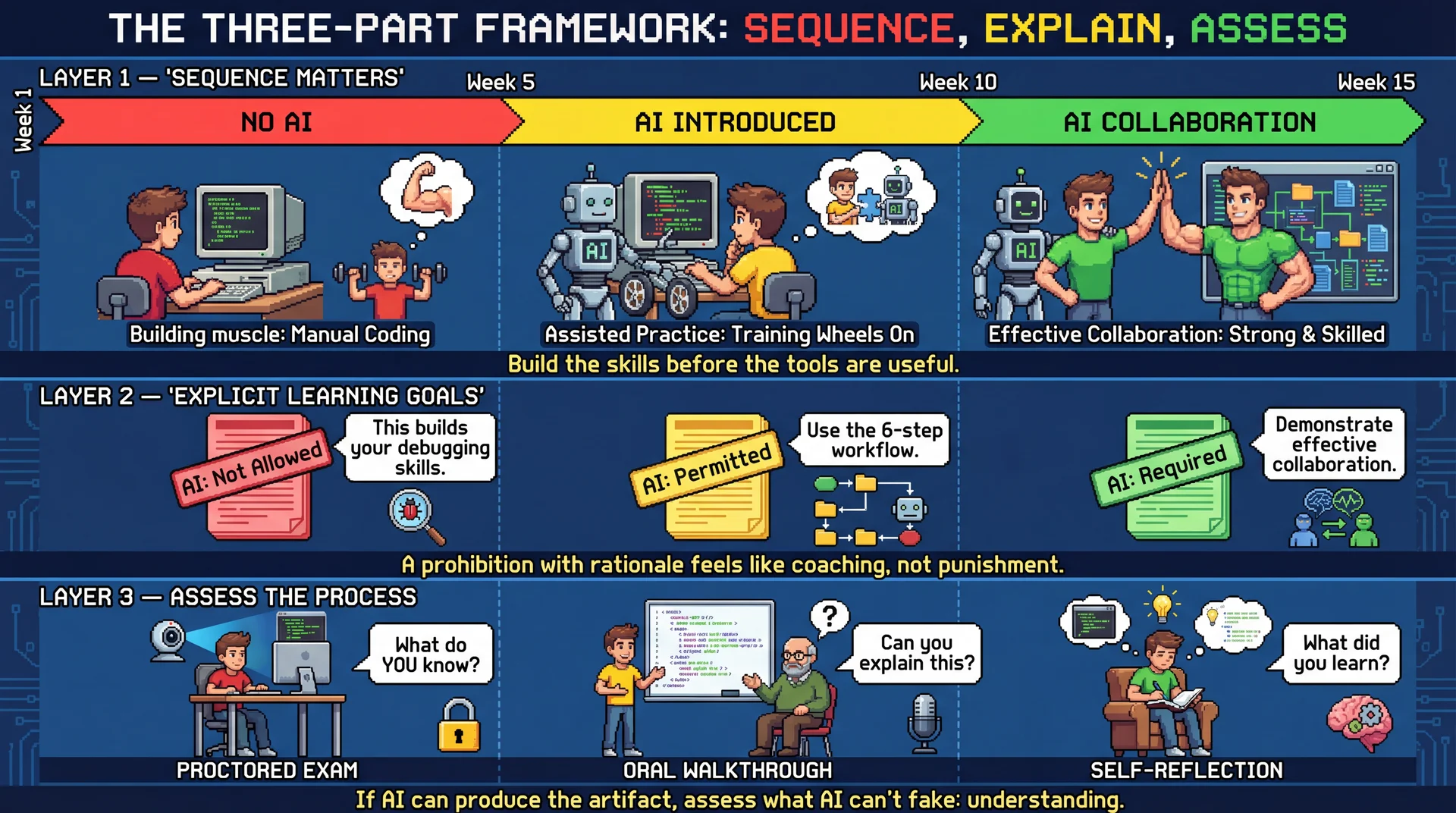

Where should the line be drawn in coursework?

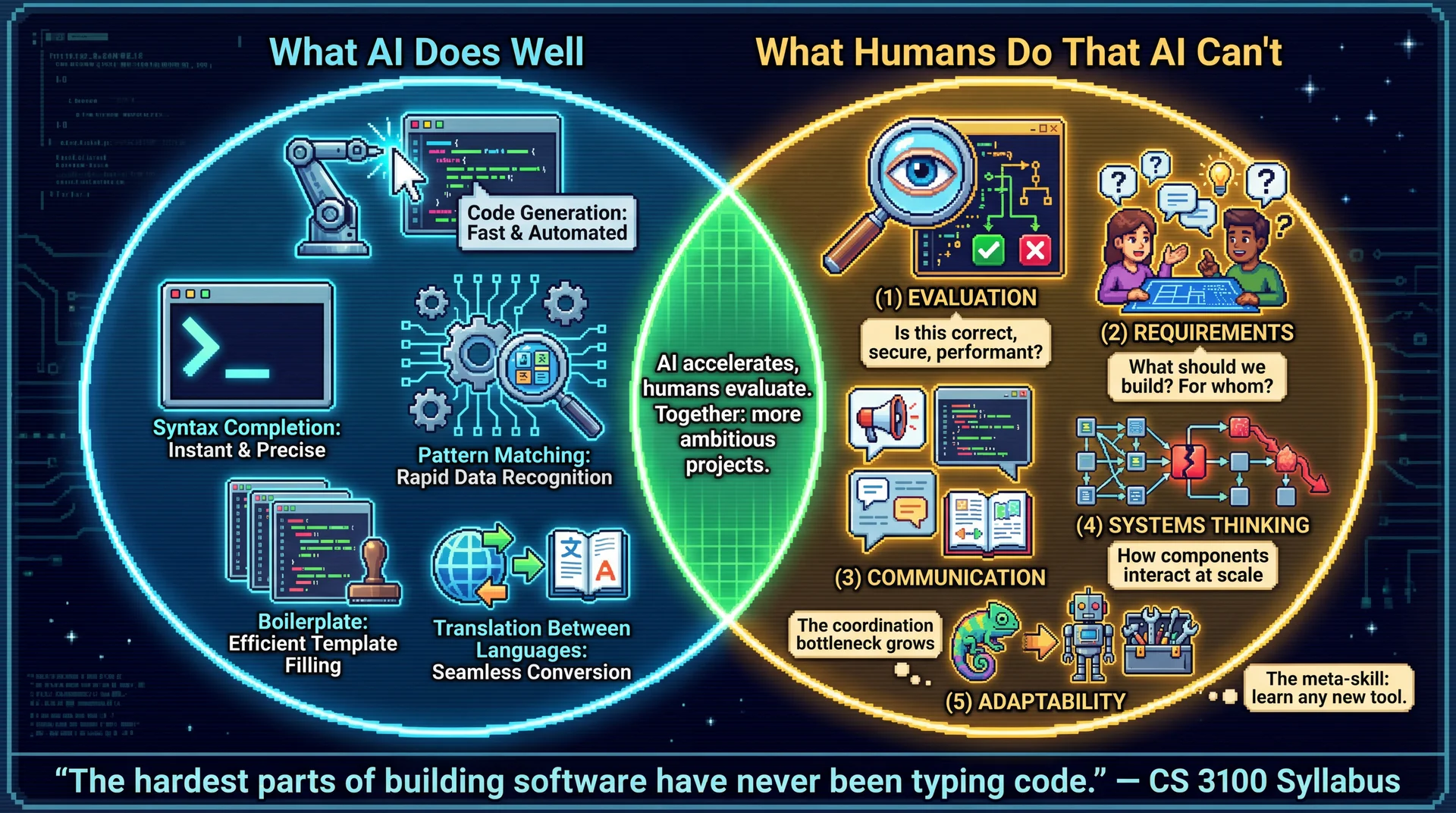

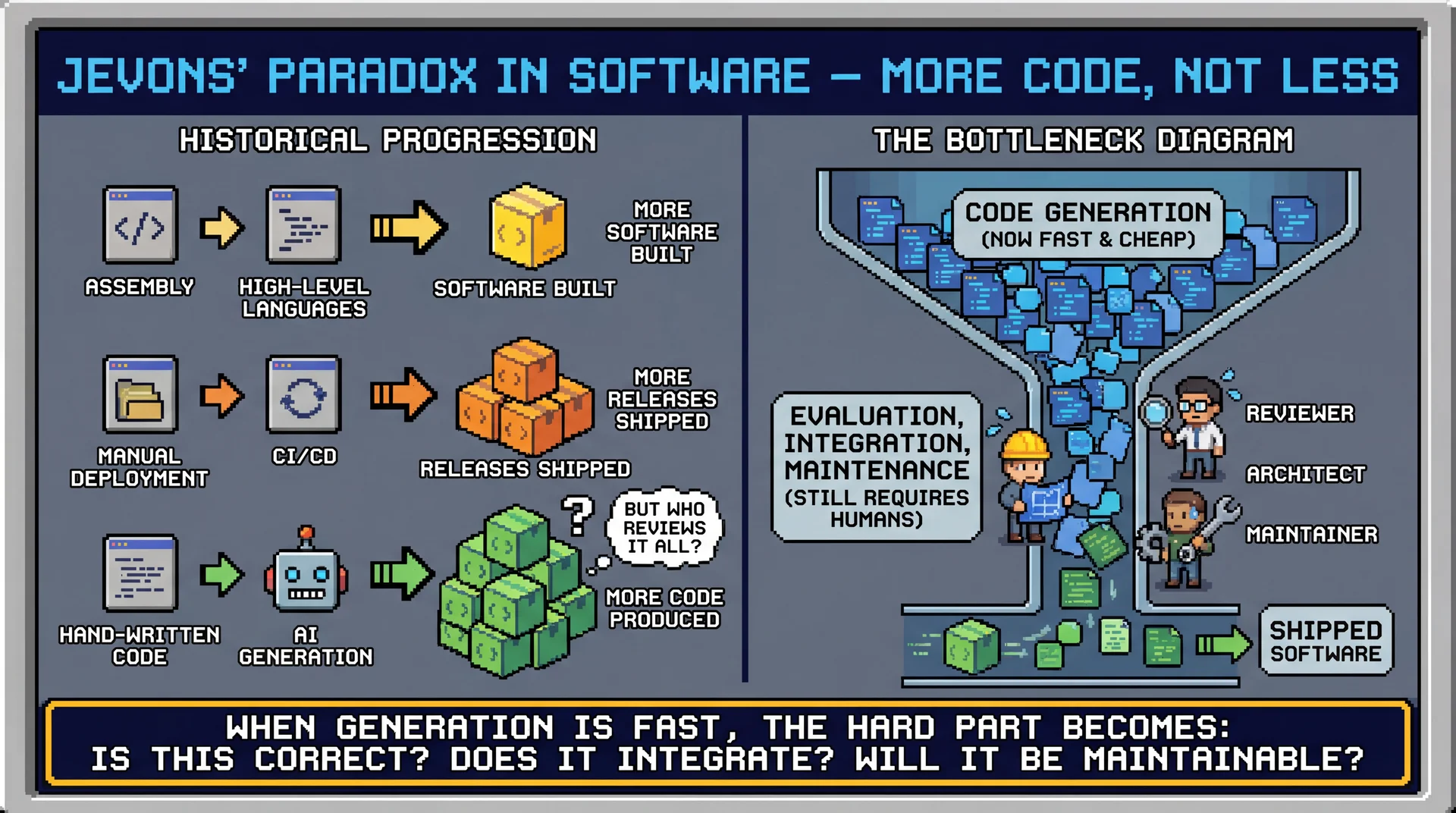

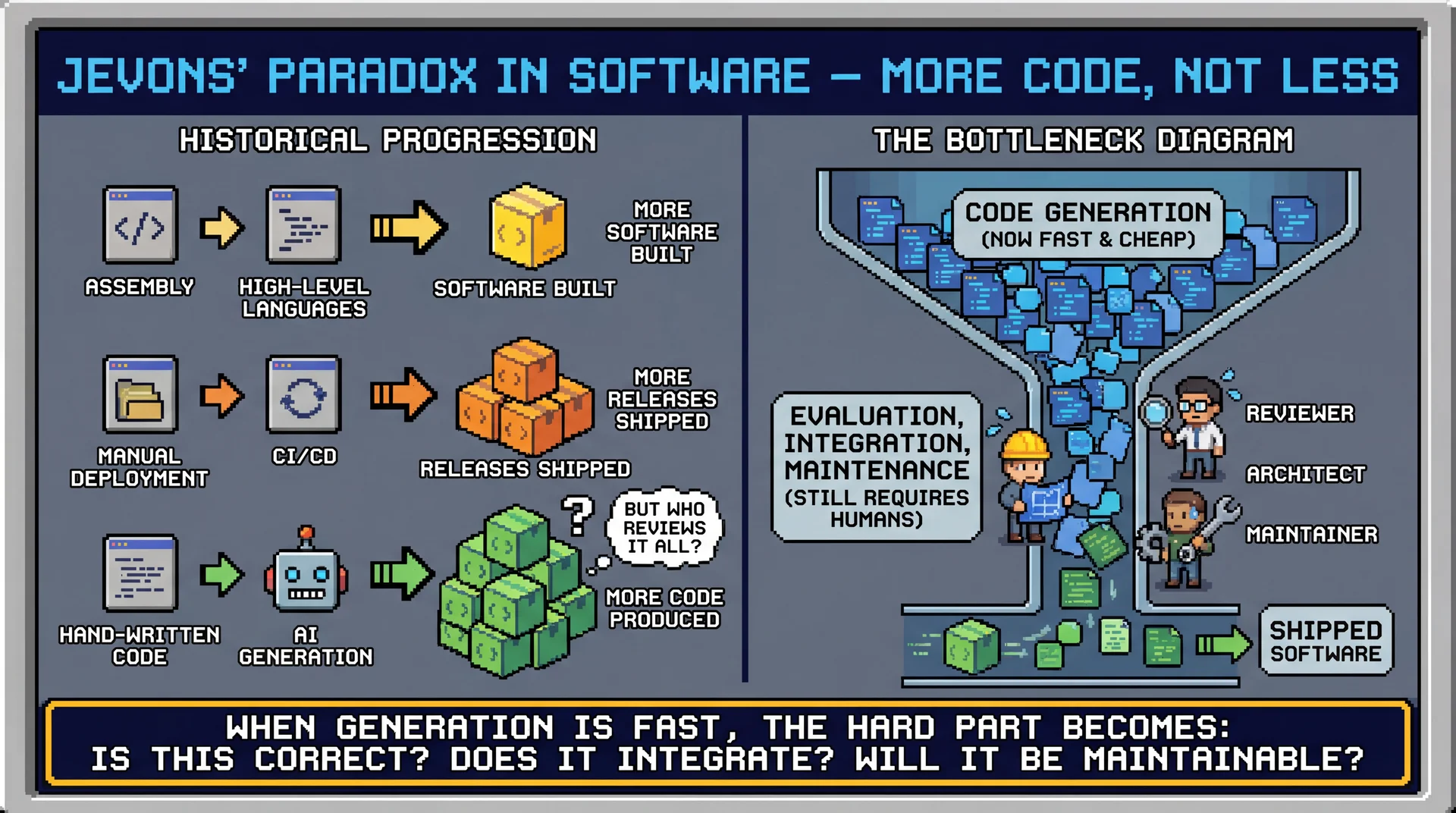

Q8: How Is AI Changing Software Engineering?

Are we training students for a future of less writing and more reviewing?

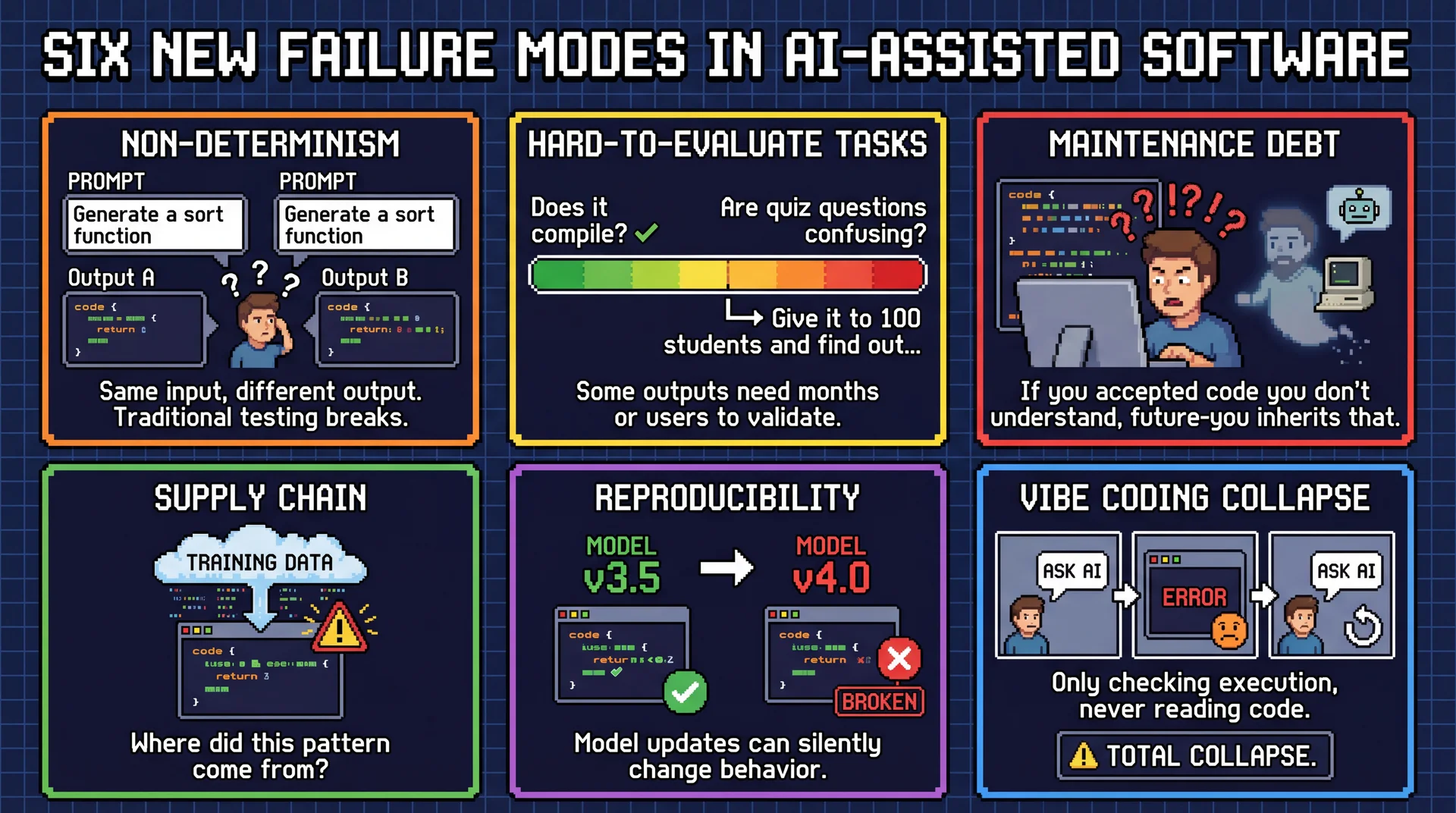

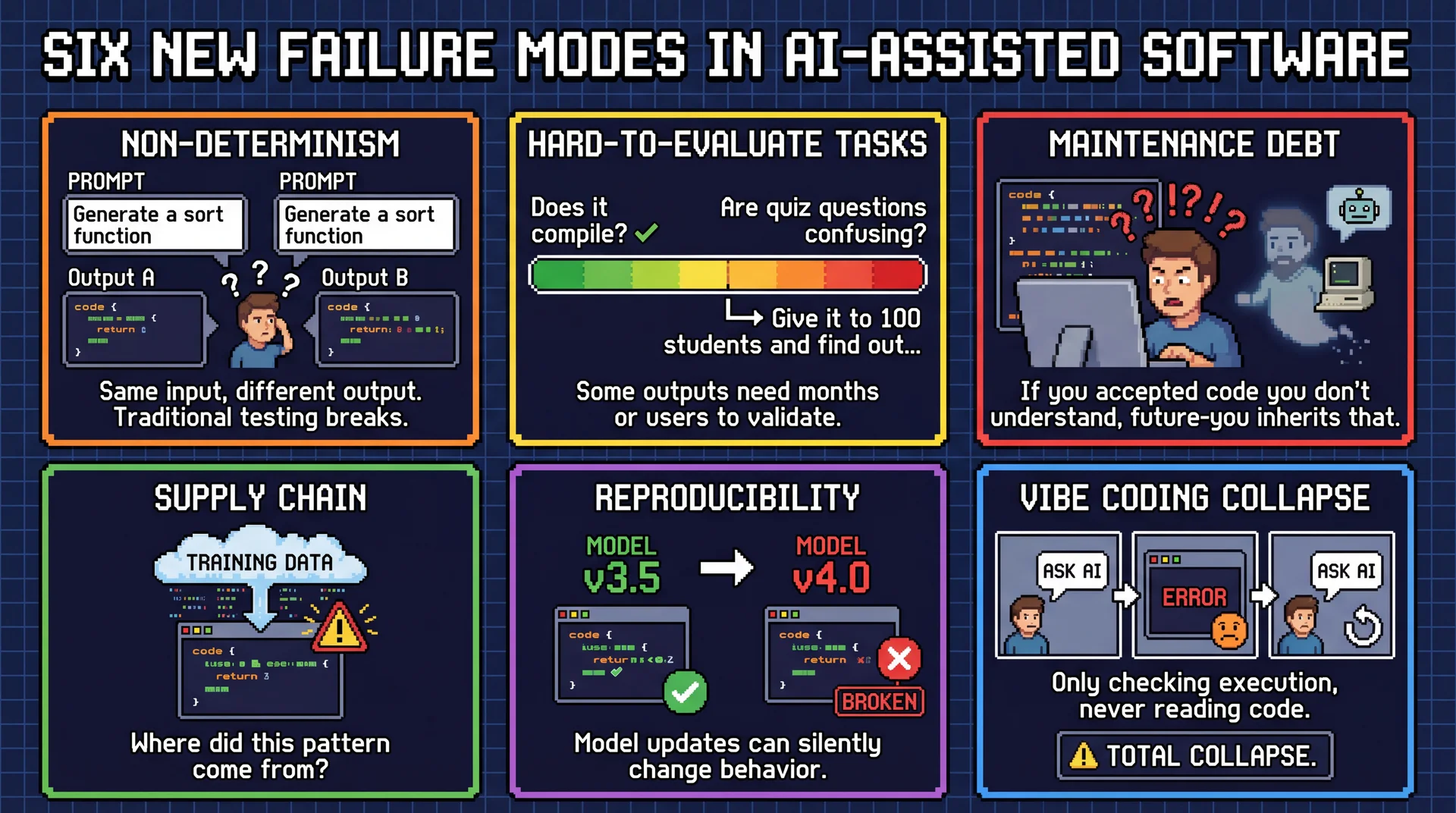

Q9: What New Engineering Challenges Does AI Create?

Reliability, testing, reproducibility, long-term maintenance?

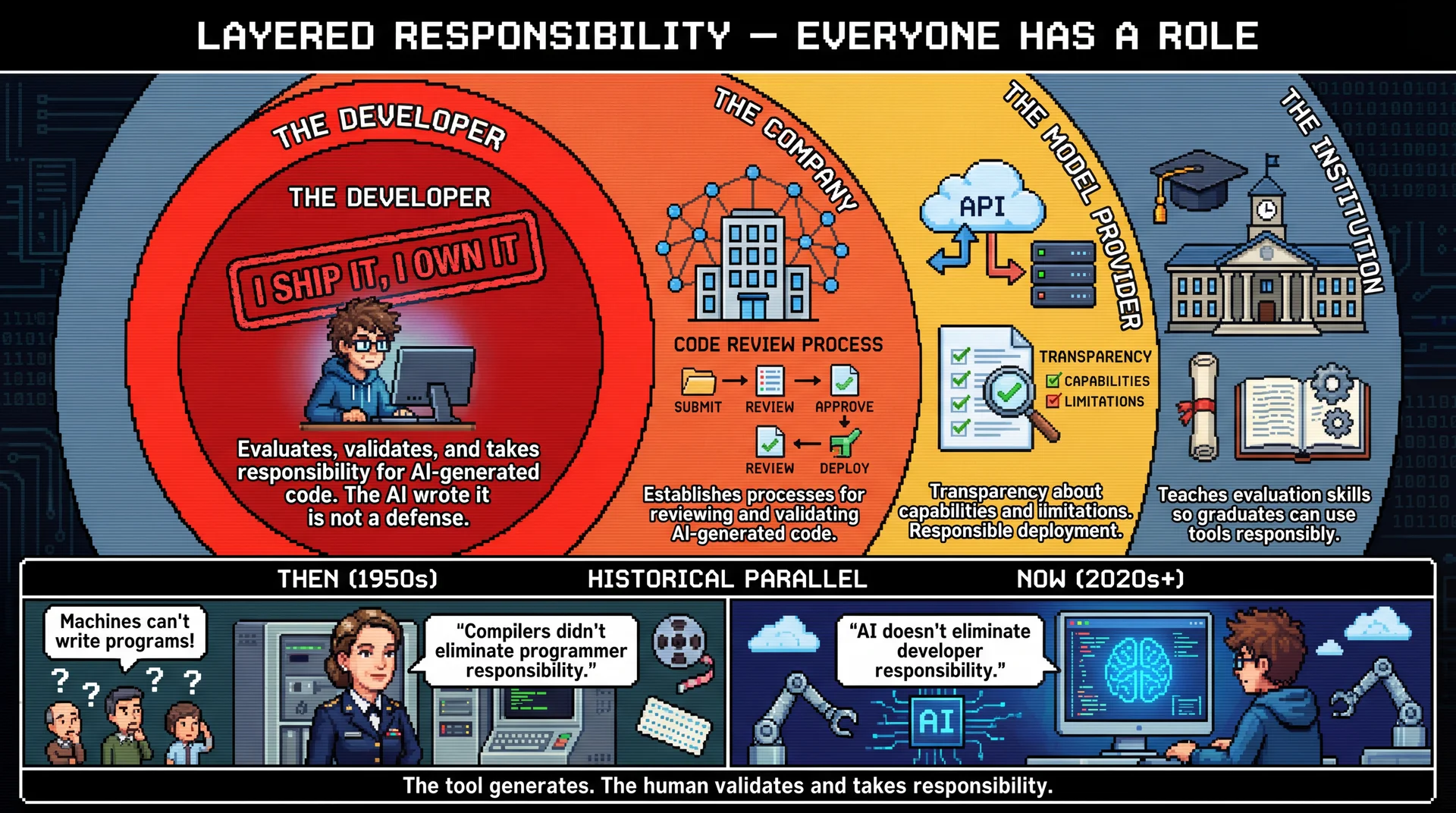

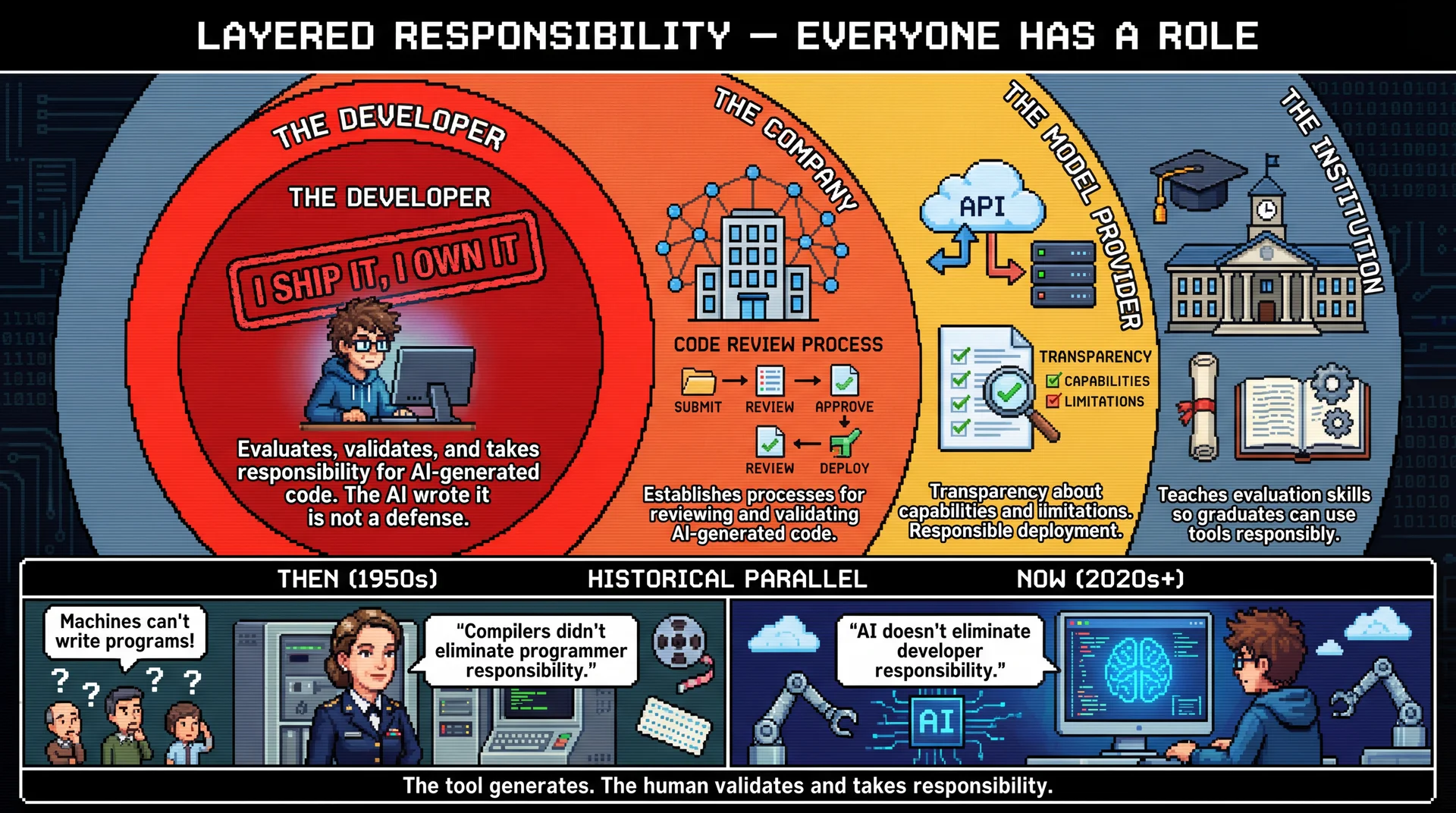

Q10: Who Is Responsible When AI Systems Cause Harm?

Developer? Company? Researcher? Institution?

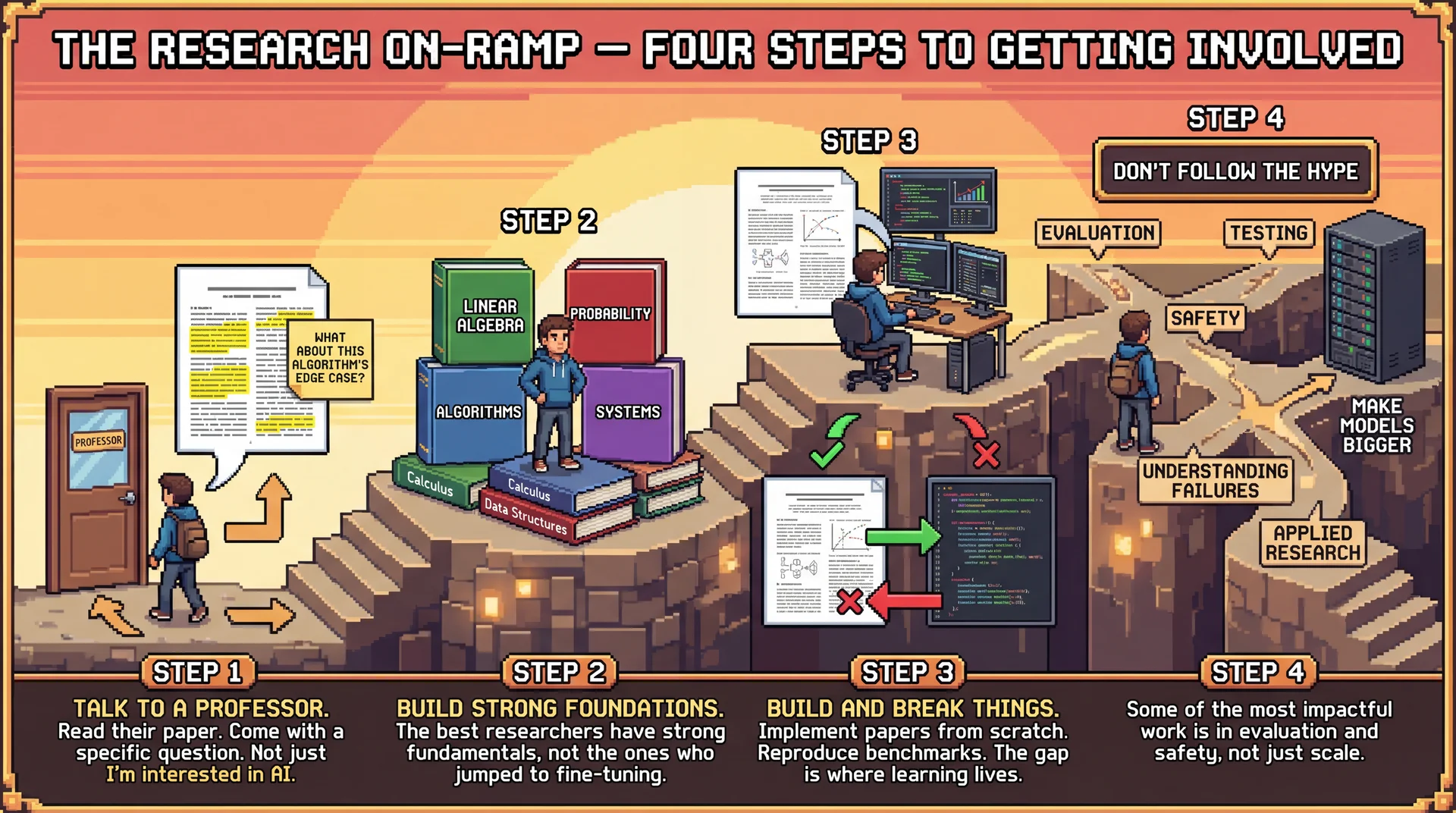

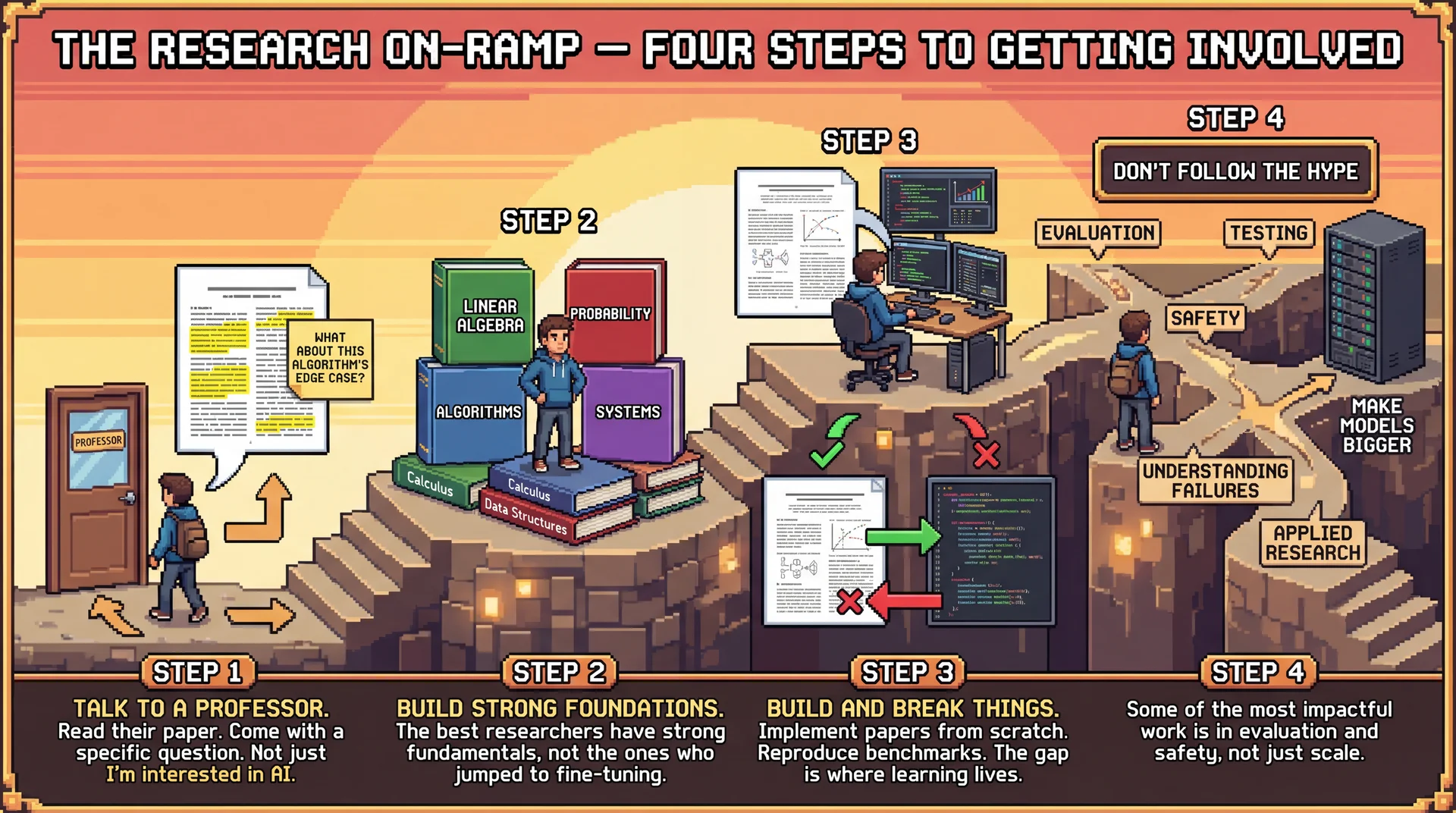

Q11: How Do Undergraduates Get Into AI Research?

What's the first concrete step?

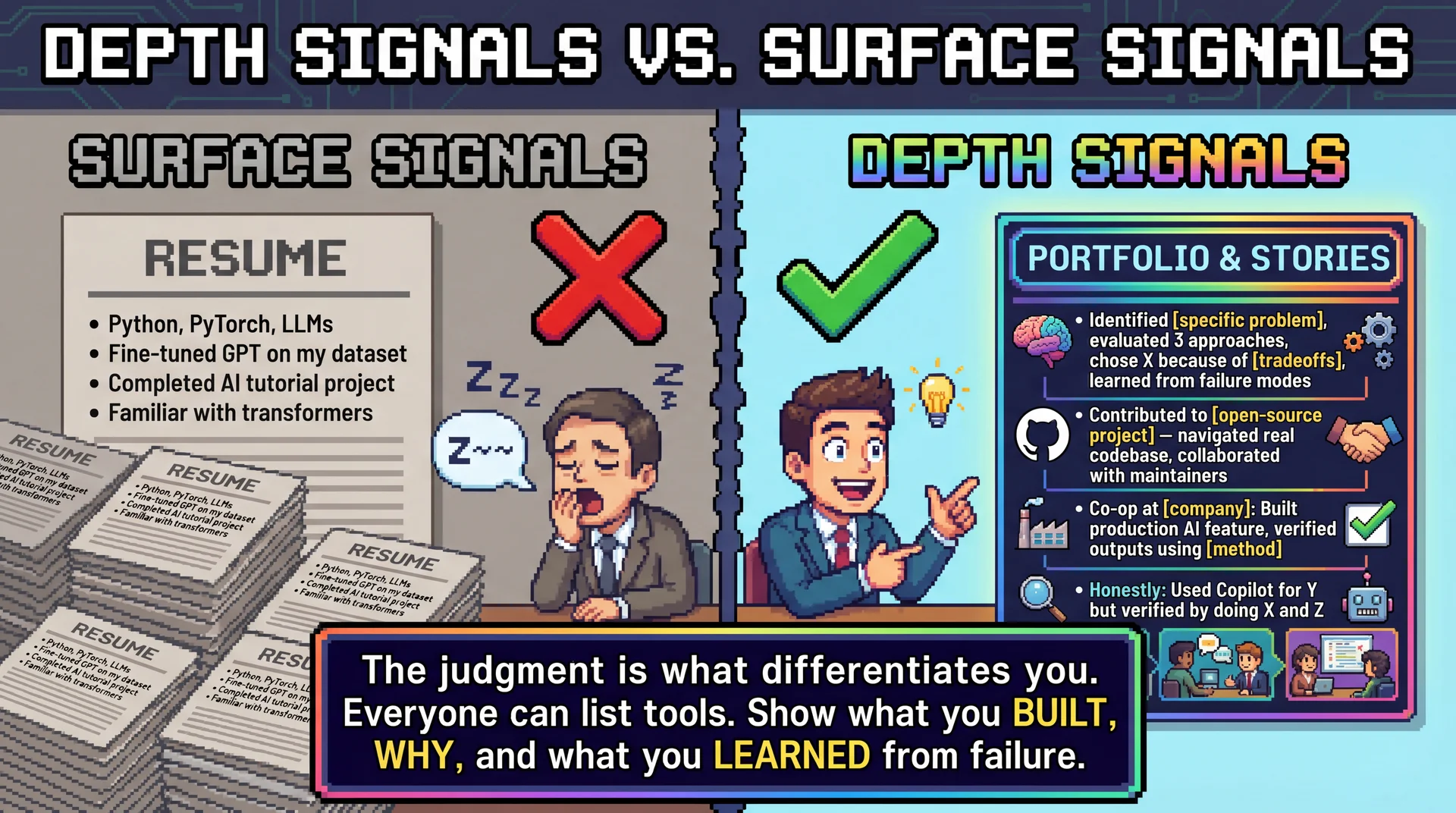

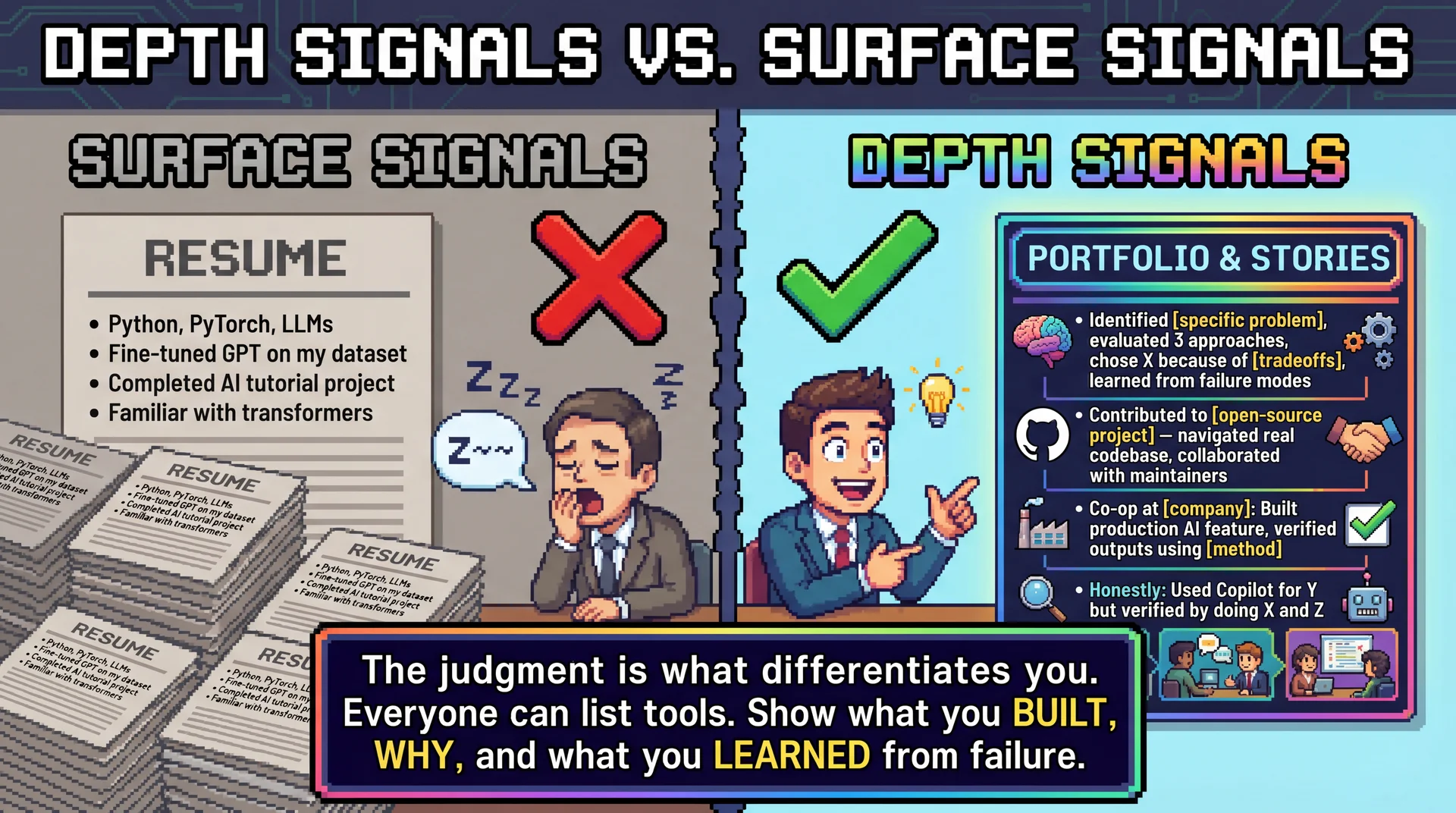

Q12: How Do You Stand Out When Everyone Lists "AI"?

What signals depth over trend-chasing?

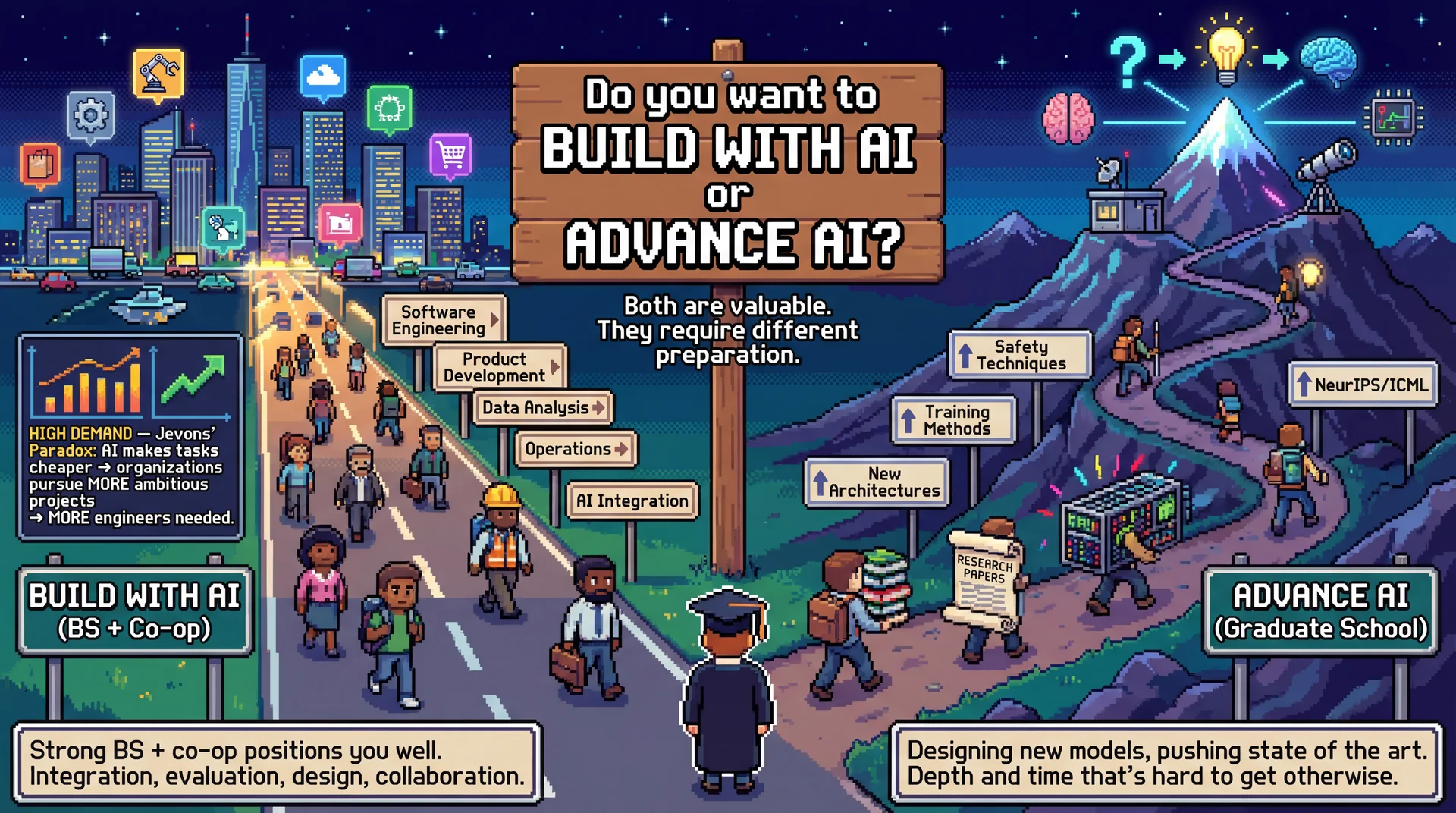

Q13: Is Graduate School Becoming Necessary for AI?

Can undergraduates still break into meaningful AI roles directly?

Q14: If You Were an Undergraduate Again Today...

What would you focus on — and what would you ignore?

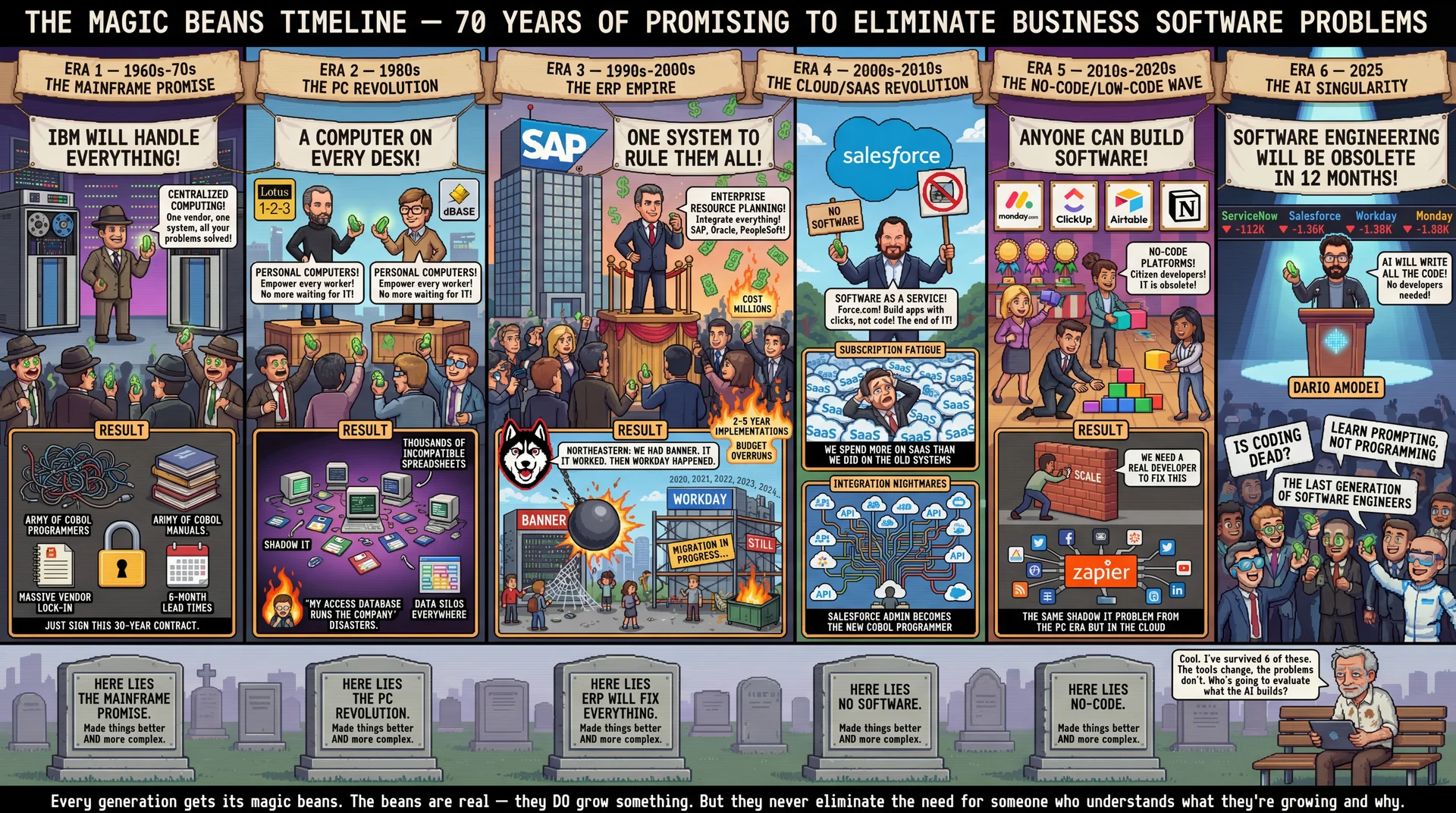

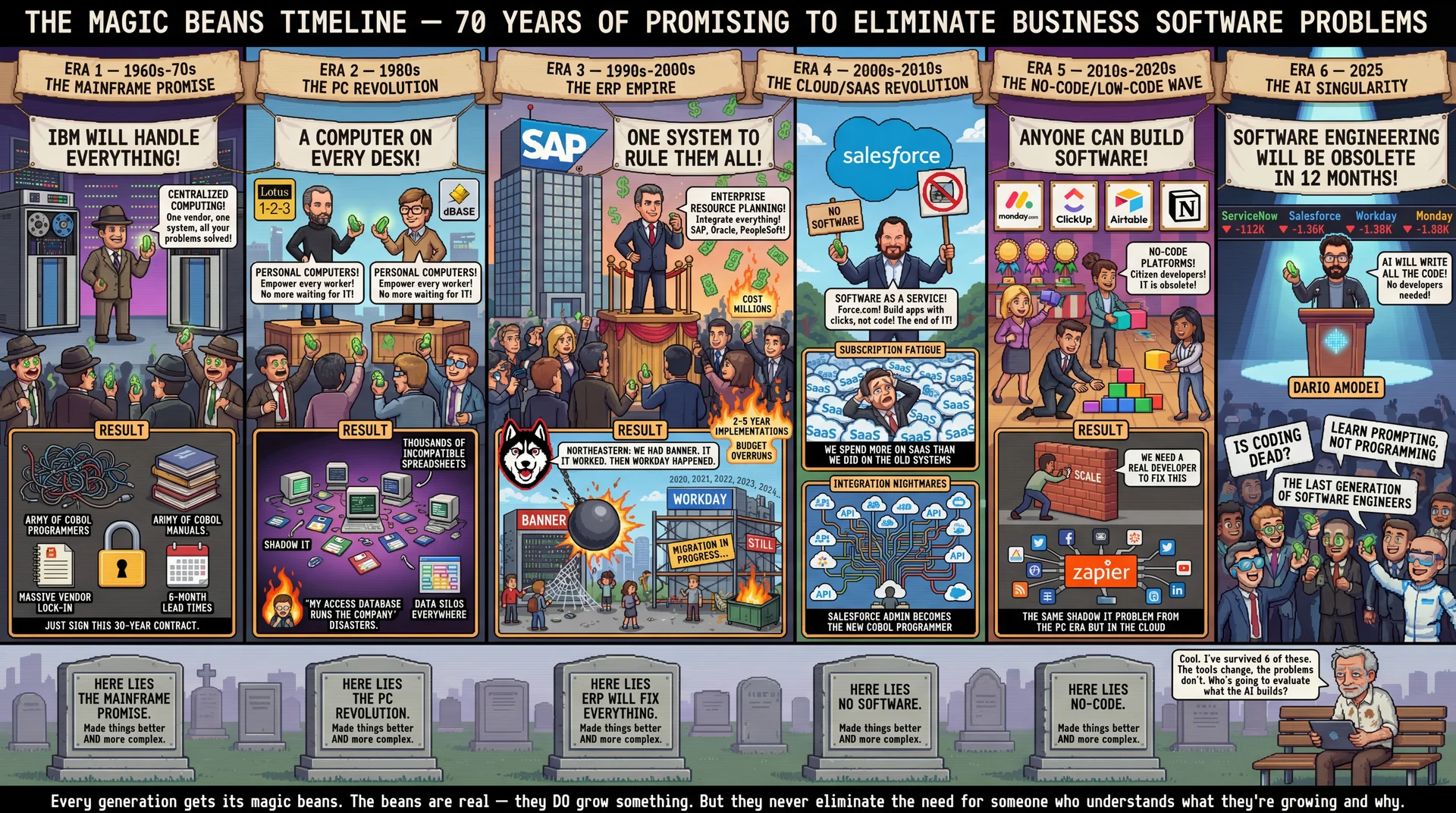

Bonus: "We'll Solve All Your Software Problems"

Dario Amodei says software engineering will be obsolete within 12 months. Where have we heard this before?

Thank You — Let's Keep This Conversation Going

The through-line of every answer tonight:

- Technology changes. Responsibility doesn't.

- The hardest parts of building software have never been typing code.

- Build the judgment that makes tools valuable — then use the tools aggressively.

Stay connected:

- These conversations continue in CS 3100, where AI is woven throughout the rest of the semester

- Your survey responses and feedback directly shape what we teach next

- The UG Advisory Committee is your voice — use it