CS 3100: Program Design and Implementation II

Lecture 9: Interpreting Requirements

©2025 Jonathan Bell, CC-BY-SA

Learning Objectives

After this lecture, you will be able to:

- Explain the overall purpose of requirements analysis and why it matters

- Enumerate and explain three major dimensions of risk in requirements analysis

- Identify the stakeholders of a software module, along with their values and interests

- Describe multiple methods to elicit users' requirements

"I Need a System to Help My TAs Grade More Efficiently"

"Can you build something by next month?"

Sounds straightforward, right? But consider:

- What does "efficiently" mean?

- What specific problems are TAs facing?

- What does "grading" include?

- Is it just running tests, or also feedback, regrades, statistics?

What the Developer Built vs. What Was Actually Needed

Version 1: What was built

- Run test cases on submissions

- Return a numerical score

- Store the final grade

Version 2: What was needed

- Run automated tests on code

- Check code style compliance

- Enable inline commenting on code

- Preserve previous grading on resubmit

- Notify original graders of resubmissions

- Prevent same grader from repeatedly grading same student

- Randomly sample 10% for quality review

- Track grader workload for fair distribution

- Support multi-stage regrade requests

- Integrate with university grade system

⚠ The difference: hundreds of hours of wasted work, frustrated users, missed deadlines.

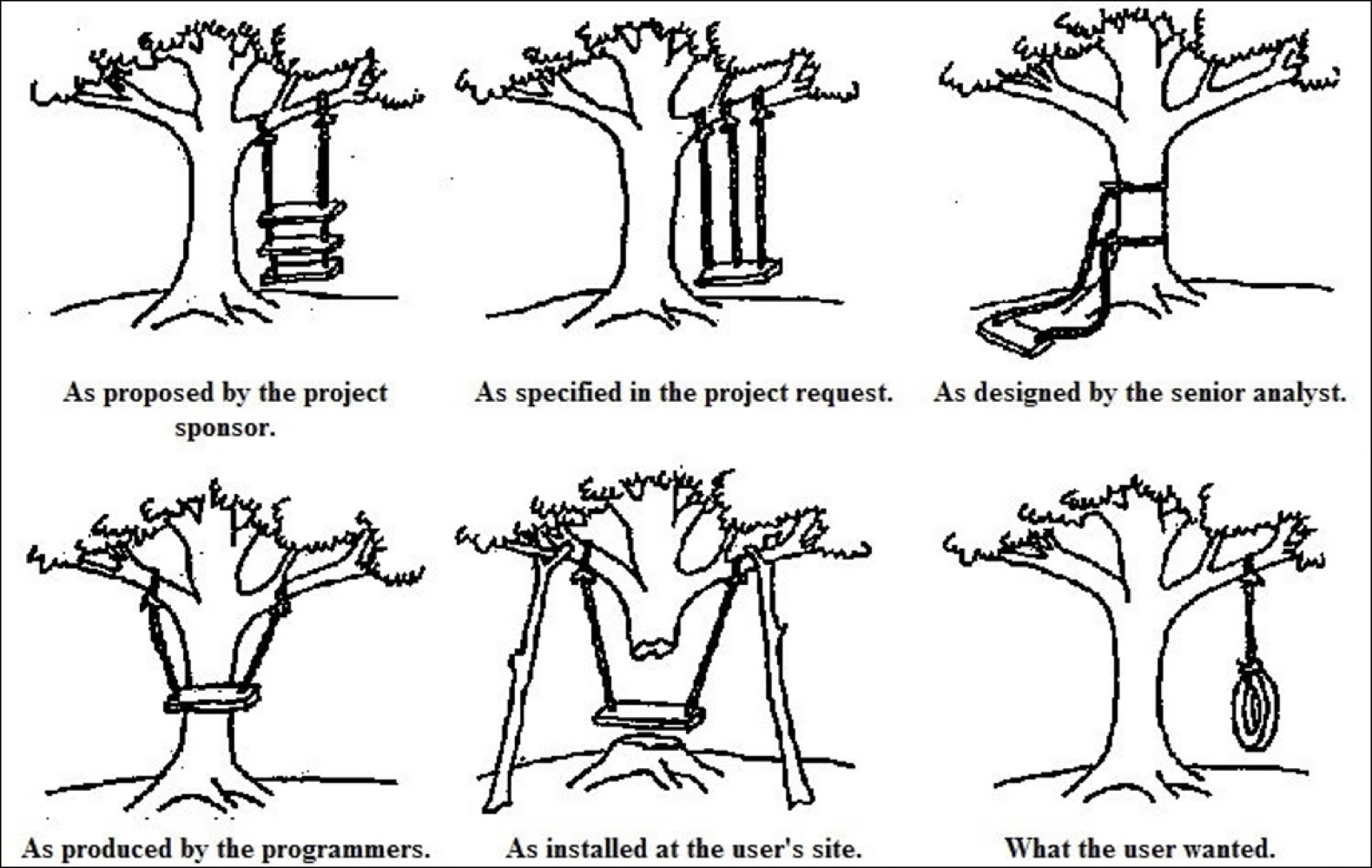

The Requirements Communication Problem Is Not New

Versions of this image have supposedly circulated since the early 1900s. Christopher Alexander used it in The Oregon Experiment (1975).

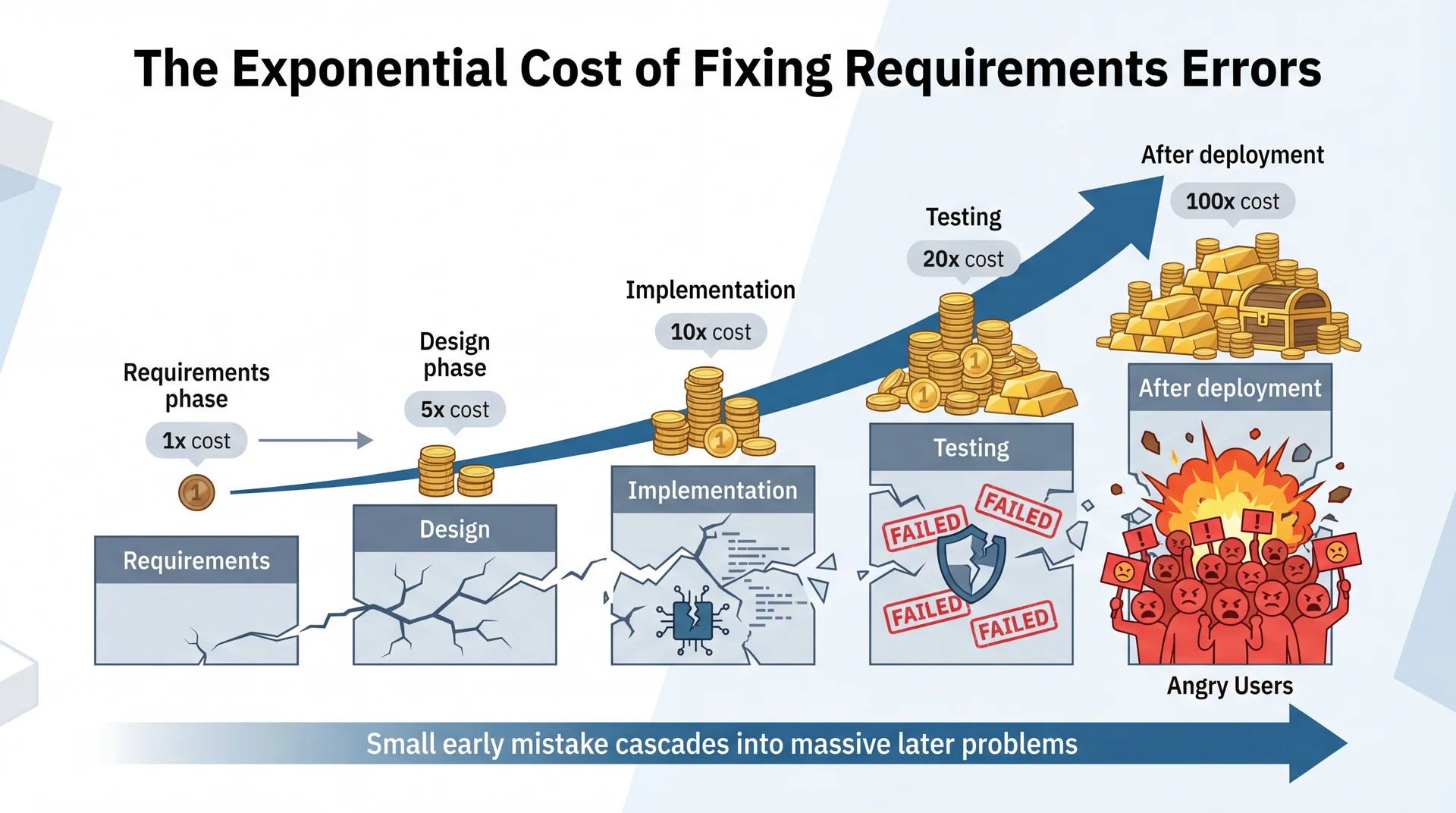

The Cost of Fixing Requirements Errors Grows Exponentially

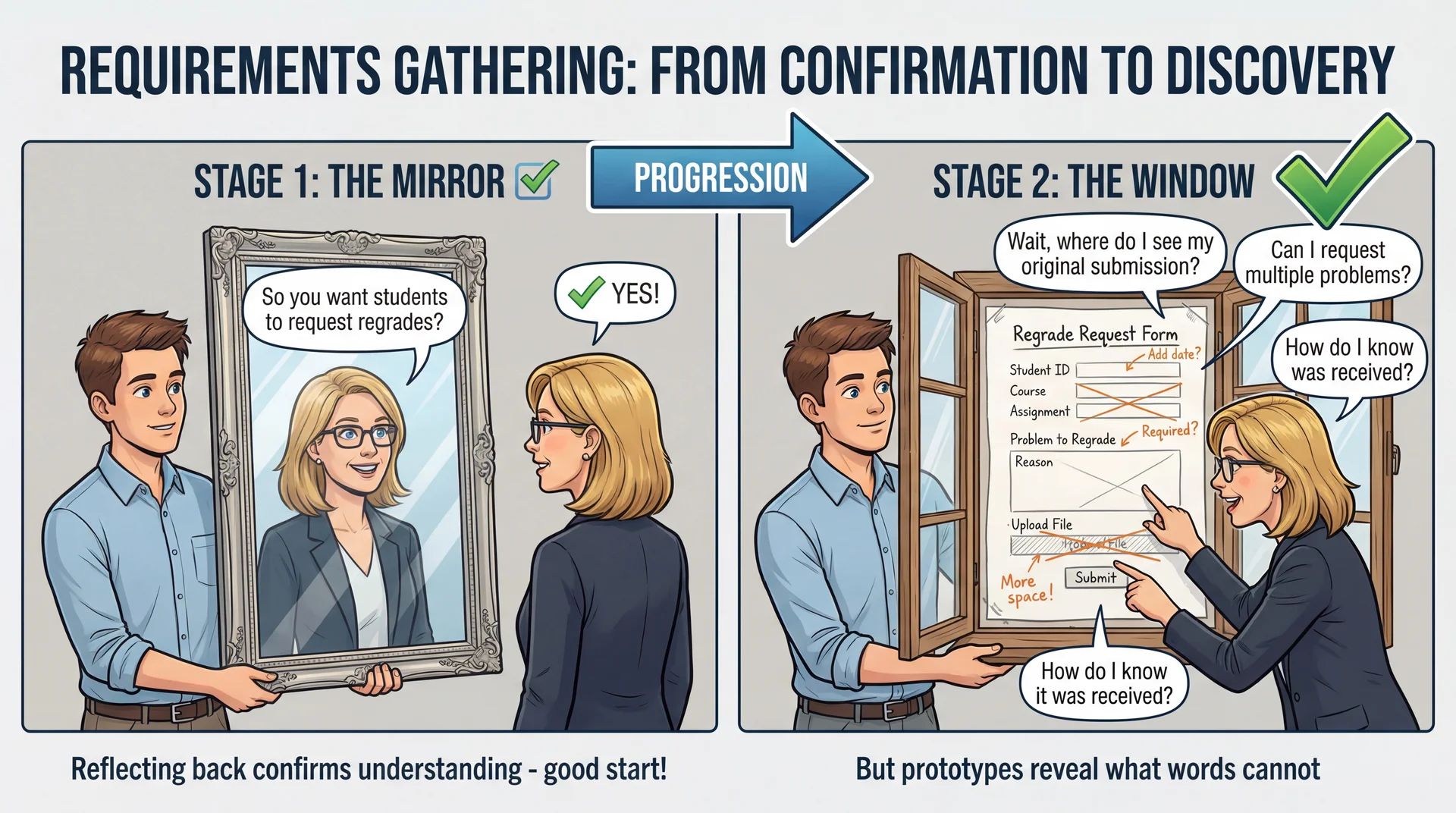

Requirements Analysis Is Not Just "Asking What They Want"

Users often:

- Don't know what they want until they see it

- Can't articulate their needs clearly

- Focus on solutions instead of problems

- Have conflicting needs with other stakeholders

- Change their minds as they learn more

Key insight: Users are experts in their domain. The goal is to engage them as design partners, not extract requirements like mining ore.

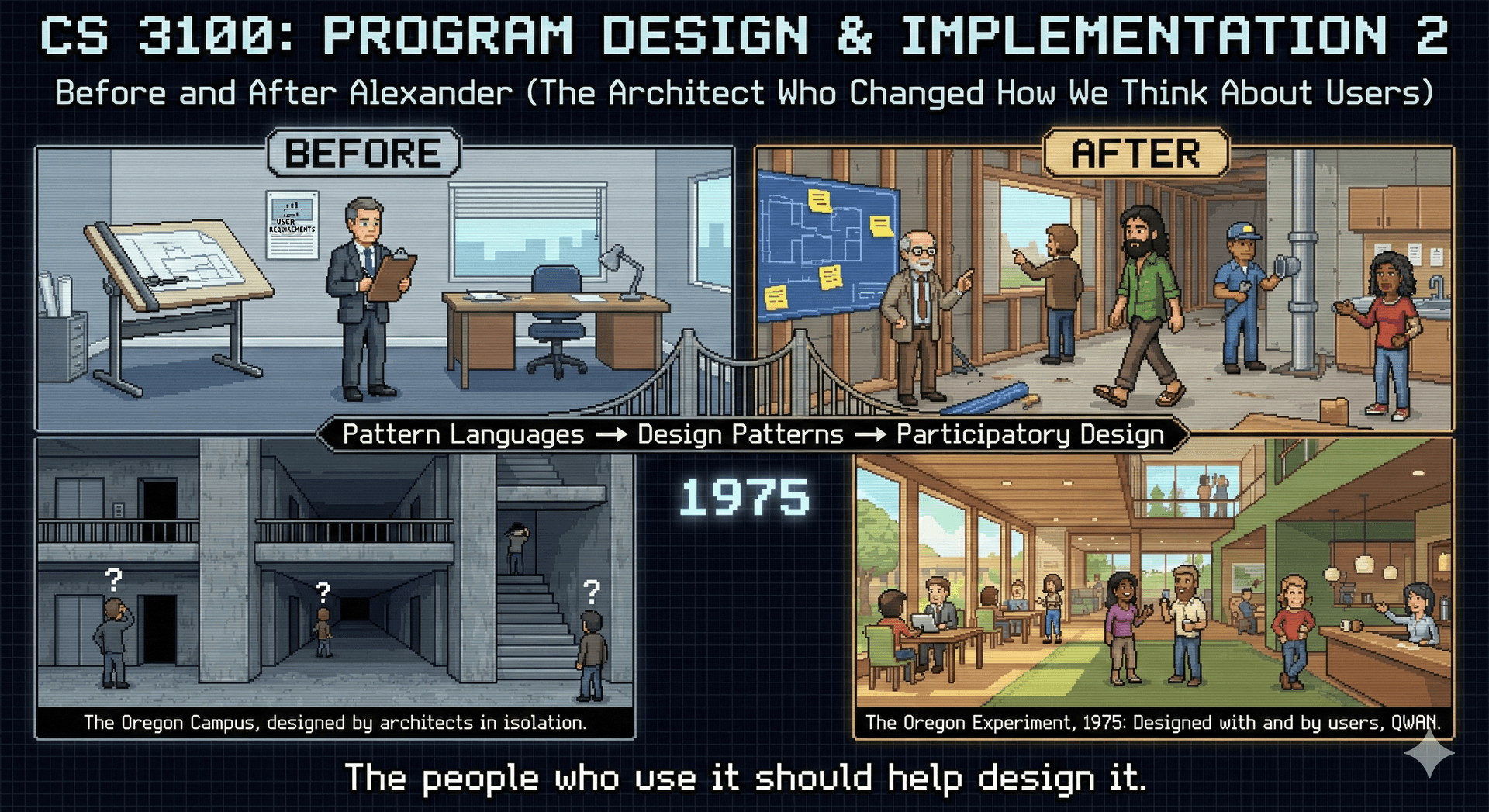

Extractive vs. Participatory Approaches

Extractive Approach ❌

Dev: "What features do you need?"

Prof: "I need automated grading."

Dev: "OK, I'll build an autograder."

[Much Later...]

Prof: "This isn't what I wanted!"

Participatory Approach ✓

Dev: "Tell me about your grading challenges."

Prof: "I spend weekends grading, students complain about inconsistency."

Dev: "Can you show me the process?"

Prof: [Shows] "I check correctness, style, approach..."

Dev: "What if we automated mechanical parts so you could focus on pedagogy?"

Prof: "That would change how I design assignments!"

Collaborative Discovery Reveals Hidden Requirements

Professor: "I need the system to be fair."

You: "What does 'fair' mean to you?"

Professor: "Students complain some TAs grade harder than others."

You: "How would you like to address that?"

Professor: "Maybe... standardize the grading somehow?"

You: "Would a rubric help? Multiple graders? Statistical normalization?"

Professor: "Oh! Actually, the real problem might be TAs interpret my rubric differently..."

You: "What if TAs could see each other's grading on example submissions?"

Professor: "Like a calibration exercise? That's brilliant!"

✓ Neither party had this solution in mind—it emerged from collaboration.

Shared Language Enables Ongoing Collaboration

- Professor stops saying "the system should be fair"

- Professor starts saying "we need inter-rater reliability"

- Developer stops thinking in databases

- Developer starts thinking in rubrics and learning outcomes

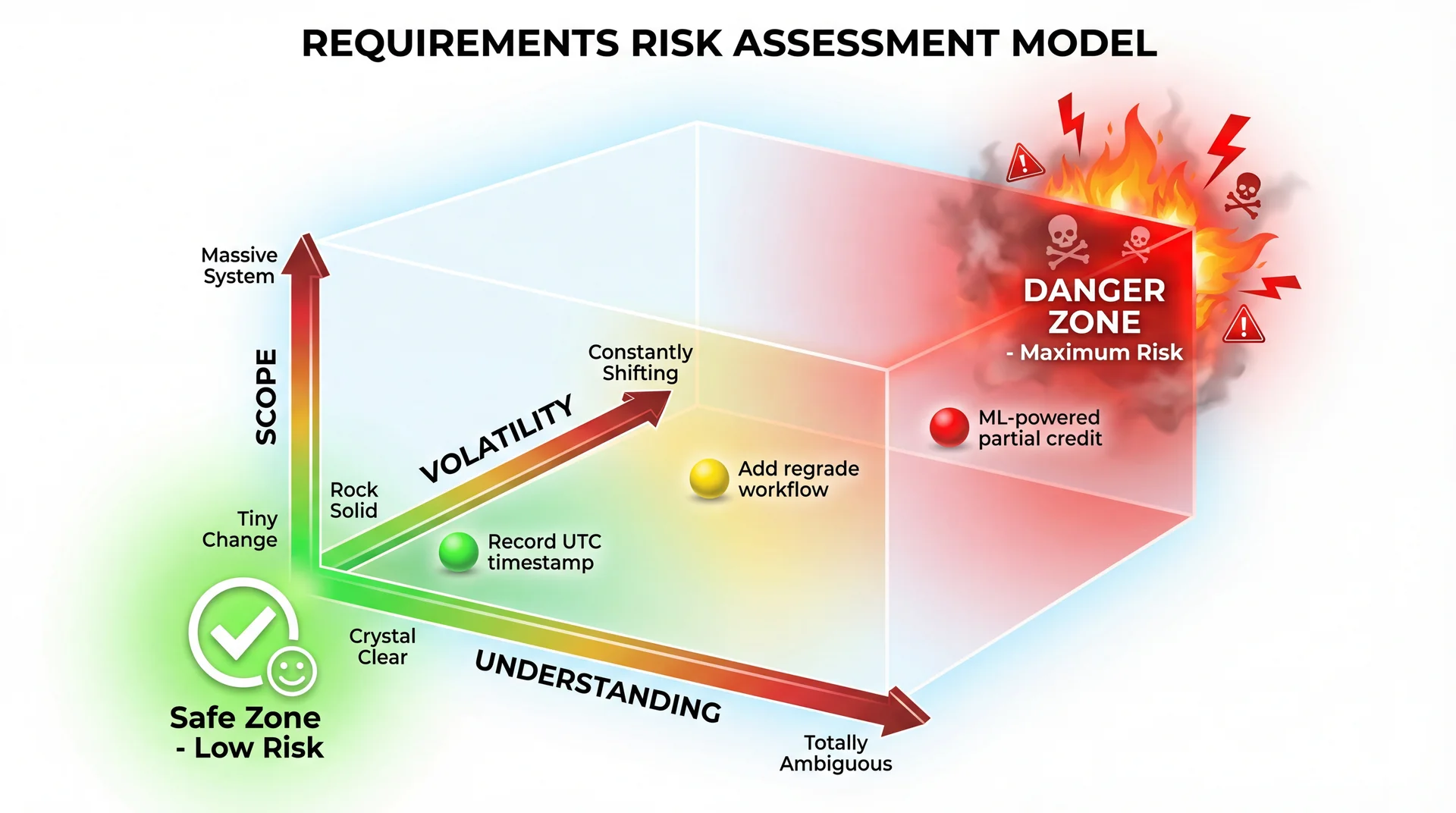

Requirements Analysis Is Fundamentally About Managing Risk

Every requirement carries risks. Understanding these risks helps you focus effort where it matters most.

Risk Dimension 1: Understanding

How well do we understand what's needed?

Low Understanding Risk ✓

"The system shall record the UTC timestamp when a submission is received."

Clear, unambiguous, everyone knows what a timestamp is.

High Understanding Risk ❌

"The system shall ensure grading quality through meta-reviews."

What's a meta-review? Who does it? When? What constitutes "quality"?
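The timestamp requirement above is low risk partly because it translates almost directly into code, with no open questions. Below is a minimal Java sketch. The `Submission` and `SubmissionDemo` classes are hypothetical and not taken from any real grading system. It uses `java.time.Instant`, which always represents a point on the UTC timeline, and takes a `Clock` so that the recorded time can be fixed in tests.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.ZoneOffset;

/** Hypothetical record of a received submission. */
final class Submission {
    private final String studentId;
    // Instant is a point on the UTC timeline, so no time-zone decision is left open.
    private final Instant receivedAt;

    /** Captures the receipt time from the given clock at construction. */
    Submission(String studentId, Clock clock) {
        this.studentId = studentId;
        this.receivedAt = Instant.now(clock);
    }

    String studentId() { return studentId; }

    Instant receivedAt() { return receivedAt; }
}

class SubmissionDemo {
    public static void main(String[] args) {
        // Production code would pass Clock.systemUTC(); a fixed clock makes the result predictable.
        Clock fixed = Clock.fixed(Instant.parse("2025-01-15T04:59:00Z"), ZoneOffset.UTC);
        Submission s = new Submission("student-42", fixed);
        System.out.println(s.studentId() + " received at " + s.receivedAt());
    }
}
```

There is no comparable sketch for "ensure grading quality through meta-reviews": you cannot write the class until someone defines what a meta-review is.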

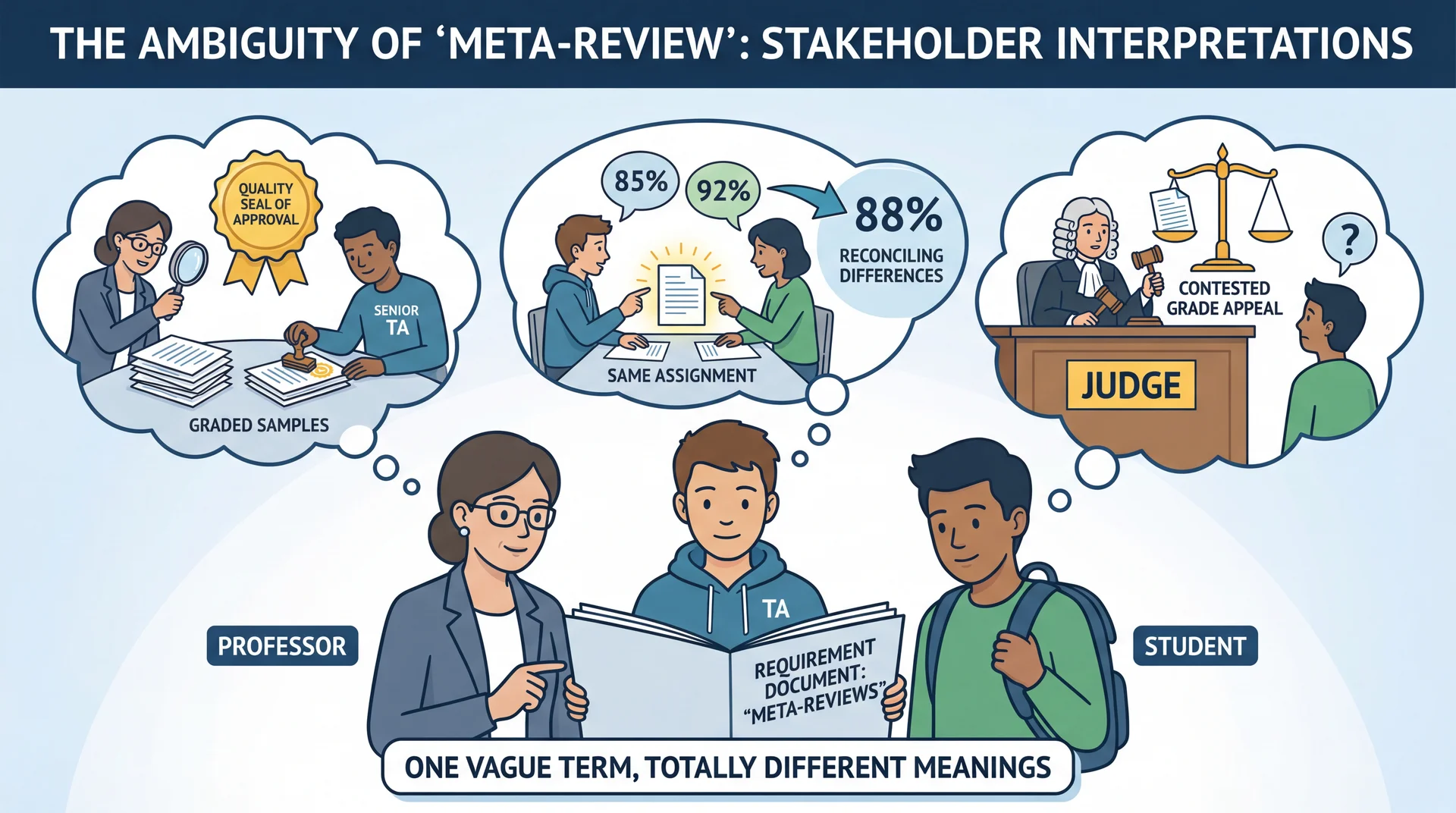

Different Stakeholders Interpret the Same Term Differently

⚠ One vague term, three completely different interpretations.

Risk Dimension 2: Scope

How much are we trying to build?

Low Scope Risk ✓

"Students have a fixed number of late tokens, each allowing a 24-hour extension."

Small, isolated change with clear boundaries.

High Scope Risk ❌

"Students can request regrades for any grading decision, which can be escalated to instructors."

Sounds like one feature, but what does it really mean?
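By contrast, the late-token requirement has clear boundaries. It fits in one small class with one operation. Below is a minimal sketch that uses a hypothetical `LateTokens` class and treats each extension as exactly 24 hours added to the deadline. The number of tokens is a constructor parameter because the requirement says only that it is "fixed", not what it is.

```java
import java.time.Duration;
import java.time.Instant;

/** Hypothetical model of the late-token policy: a fixed budget, 24 hours per token. */
final class LateTokens {
    static final Duration EXTENSION_PER_TOKEN = Duration.ofHours(24);
    private int remaining;

    LateTokens(int initialTokens) {
        if (initialTokens < 0) {
            throw new IllegalArgumentException("token count must be non-negative");
        }
        this.remaining = initialTokens;
    }

    int remaining() { return remaining; }

    /** Spends one token and returns the deadline pushed back by 24 hours. */
    Instant useToken(Instant deadline) {
        if (remaining == 0) {
            throw new IllegalStateException("no late tokens left");
        }
        remaining--;
        return deadline.plus(EXTENSION_PER_TOKEN);
    }
}

class LateTokensDemo {
    public static void main(String[] args) {
        LateTokens tokens = new LateTokens(2); // hypothetical budget of two tokens
        Instant due = Instant.parse("2025-02-01T04:59:00Z");
        Instant extended = tokens.useToken(due);
        System.out.println("New deadline: " + extended + ", tokens left: " + tokens.remaining());
    }
}
```

The regrade requirement has no equally small class. You cannot write one until the hidden questions about who, when, and how often are answered.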

Poll: Regrade Requests

If you were asked to design a regrade request system, what questions would you ask to better understand the requirements?

One Feature Hides Dozens of Decisions - Regrades

Visible requirements:

- Students can request regrades

- Requests include justification

- Original grader reviews requests

- Decisions can be appealed

Hidden requirements:

- Who can request? (Student only? Team members?)

- When can they request? (Immediately? Cooling period? Before finals?)

- How many requests allowed? (Per assignment? Per semester?)

- What's the escalation path? (Grader → Meta-grader → Instructor?)

- What prevents gaming? (Shopping for easy grader?)

- How are groups handled? (One request per group? Individual?)

- What about concurrent modifications?

- What audit trail is needed?

⚠ Each question represents additional scope. What seemed like one feature is actually dozens of interconnected decisions.
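One way to make this hidden scope visible is to write each open question down as a parameter that someone must eventually choose a value for. The record below is a hypothetical sketch. Its names are illustrative and do not describe Pawtograder's actual design. Each field corresponds to one of the questions above, and no field can be filled in until stakeholders decide.

```java
import java.time.Duration;
import java.util.List;

/** Hypothetical: each field is one hidden decision behind the regrade feature. */
record RegradeDecisions(
        boolean teamMembersMayRequest,      // Who can request?
        Duration coolingPeriod,             // When can they request?
        int maxRequestsPerAssignment,       // How many requests allowed?
        List<String> escalationPath,        // What's the escalation path?
        boolean sameGraderReviews,          // What prevents shopping for an easy grader?
        boolean oneRequestPerGroup,         // How are groups handled?
        boolean lockGradeDuringReview,      // What about concurrent modifications?
        boolean logEveryStateChange) {      // What audit trail is needed?

    RegradeDecisions {
        // Defensive copy so the escalation path cannot change after construction.
        escalationPath = List.copyOf(escalationPath);
        if (maxRequestsPerAssignment < 0) {
            throw new IllegalArgumentException("request limit must be non-negative");
        }
    }
}

class RegradeDecisionsDemo {
    public static void main(String[] args) {
        // Example values only; choosing them is exactly the requirements work.
        RegradeDecisions d = new RegradeDecisions(
                true, Duration.ofHours(24), 2,
                List.of("Grader", "Meta-grader", "Instructor"),
                true, true, true, true);
        System.out.println(d);
    }
}
```

Even this list is incomplete. For example, choosing `maxRequestsPerAssignment` already assumes the limit is per assignment rather than per semester.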

Risk Dimension 3: Volatility

How likely are requirements to change?

Low Volatility Risk ✓

"Use the university's standard letter grading scale (A, A-, B+, B, B-, ...)"

Stable for decades, unlikely to change.

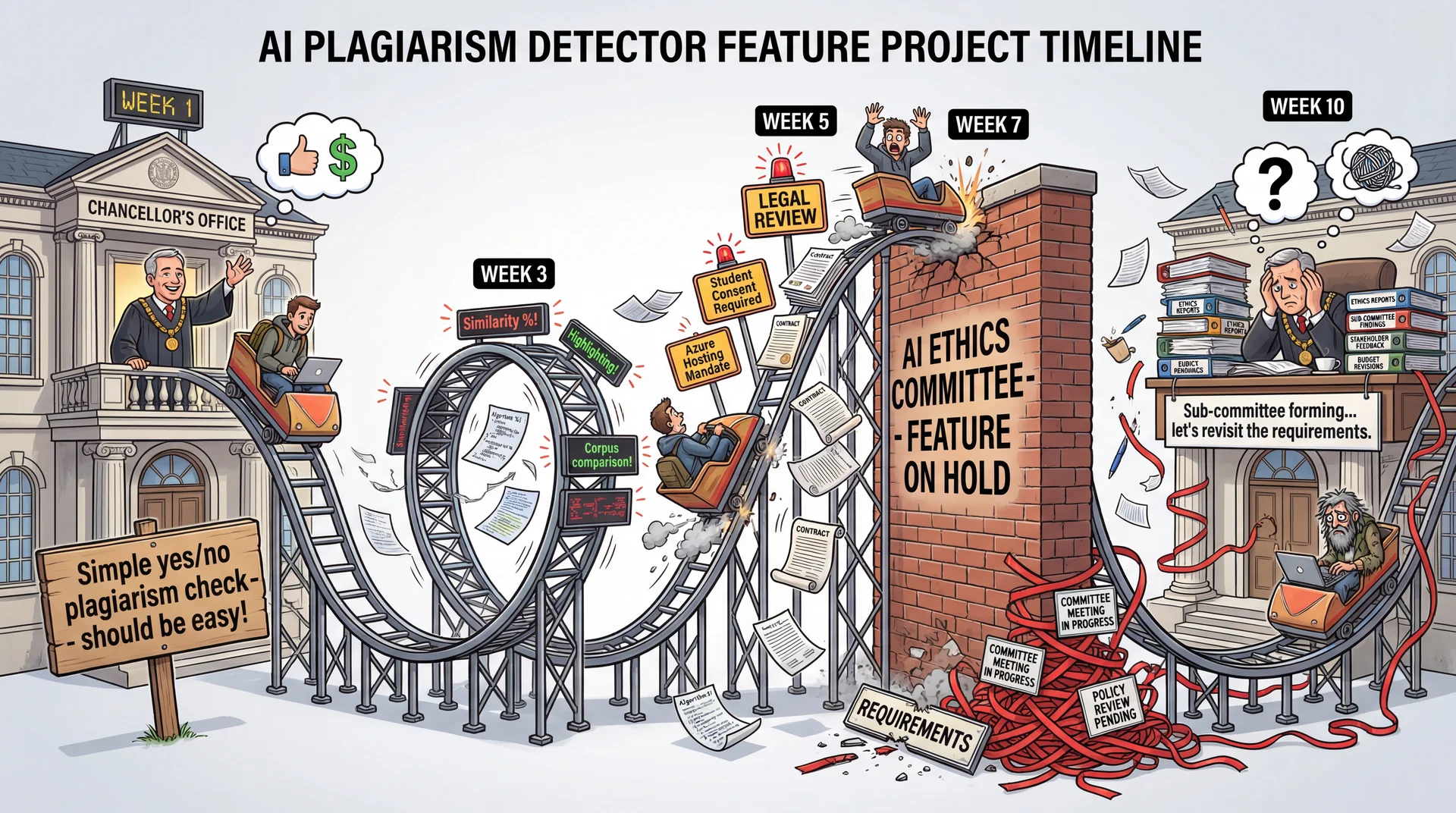

High Volatility Risk ❌

"Integrate with the university's new AI-powered plagiarism detector currently in contract negotiations with three vendors."

Unstable API, multiple vendors, external organization control.

Volatile Requirements Evolve Unpredictably

Sources of volatility: External dependencies, regulatory requirements, political factors, technical uncertainty, market changes.

High-Risk Requirements Score High on Multiple Dimensions

Consider this requirement:

"The system shall use machine learning to automatically assign partial credit in a way that's pedagogically sound and legally defensible."

| Dimension | Risk Level | Why |
|---|---|---|
| Understanding | HIGH | What is "pedagogically sound"? "Legally defensible"? What ML? |
| Scope | HIGH | Training data, scaling to new assignments, ML pipeline, evaluation, legal review, appeals system |
| Volatility | HIGH | ML evolves, legal requirements change, pedagogy is debated |

Poll: Stakeholders

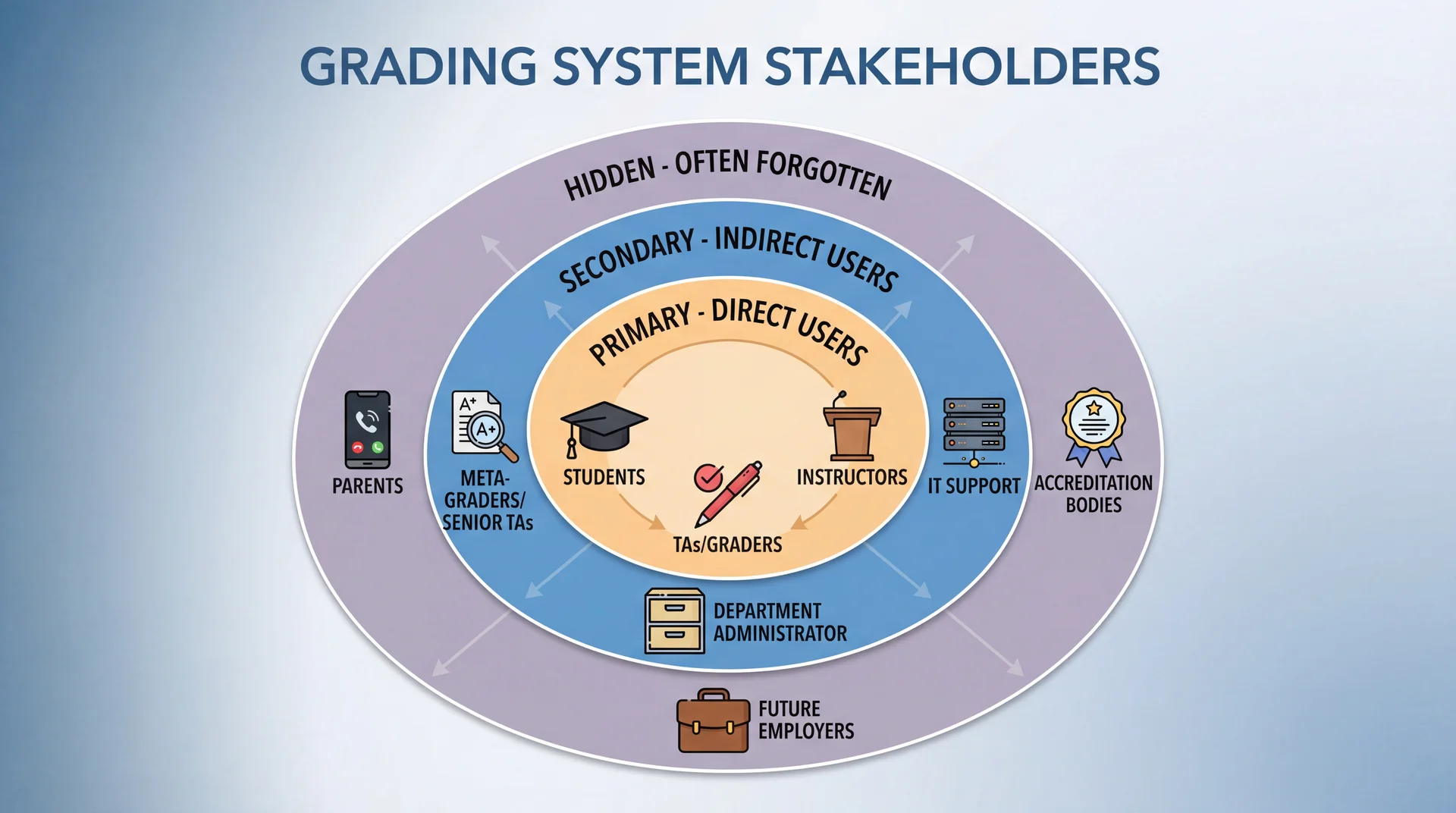

Who are stakeholders for an online grading system?

Give short answers for a word cloud.

A Stakeholder Is Anyone Affected By or Affecting the System

Missing a key stakeholder is like designing a building without talking to the people who will live in it.

Primary Stakeholders Have Different Values and Fears

| Stakeholder | Primary Concern | Values | Fears |
|---|---|---|---|
| Students | "Will I get the grade I deserve?" | Fairness, transparency, timeliness | Biased grading, lost submissions, harsh TA |
| Graders (TAs) | "Can I grade fairly without losing my weekend?" | Efficiency, accuracy, workload balance | Overwhelming workload, hostile regrades |
| Instructors | "Are students learning and evaluated fairly?" | Educational outcomes, integrity, oversight | Grade complaints, inconsistent grading |

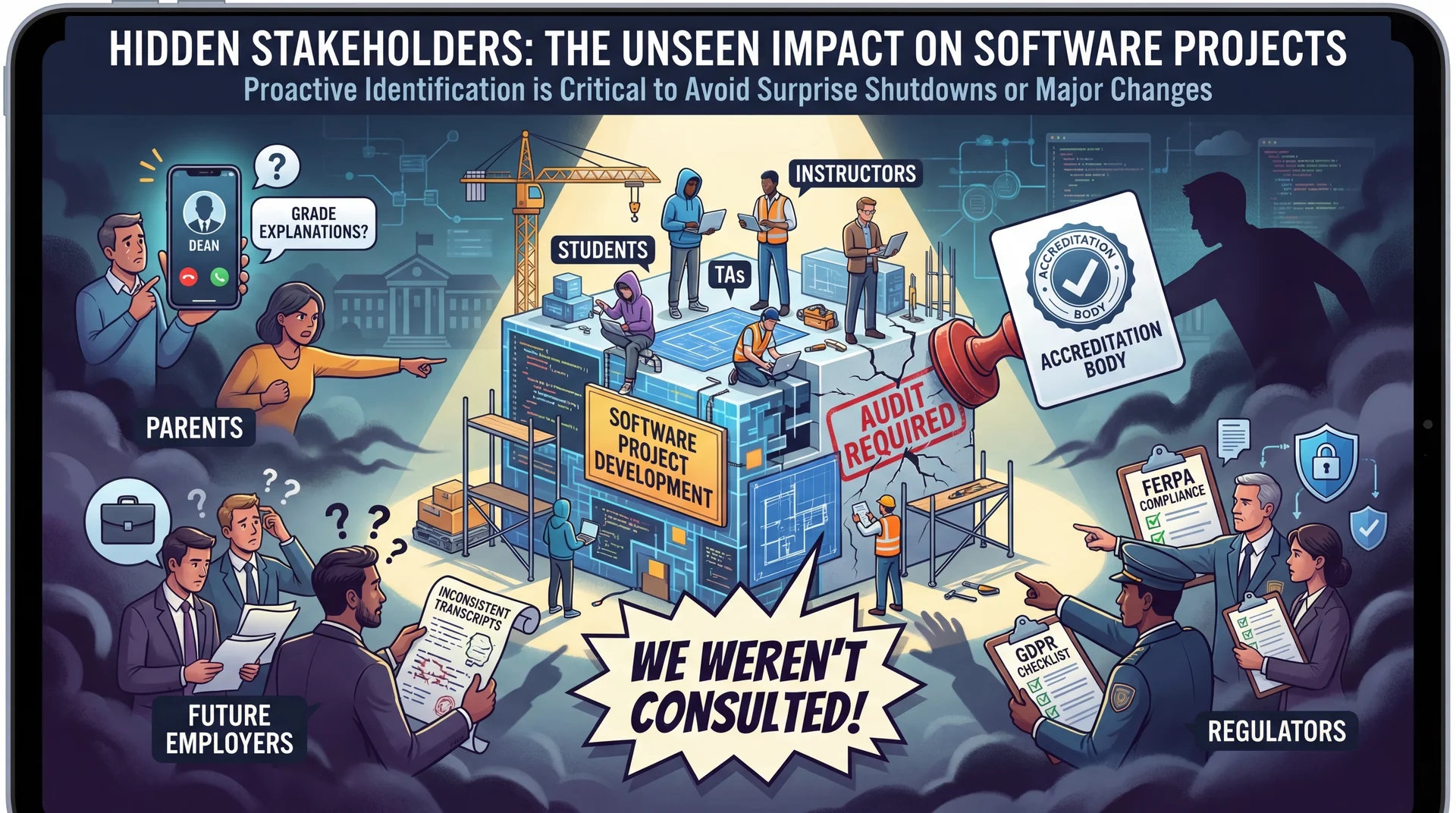

Hidden Stakeholders Can Derail Projects

- Parents: May call demanding grade explanations

- Future Employers: Rely on grades as competence signals

- Accreditation Bodies: Can shut down programs that don't meet standards

Poll: Stakeholder Conflicts

Identify a conflict between students and graders.

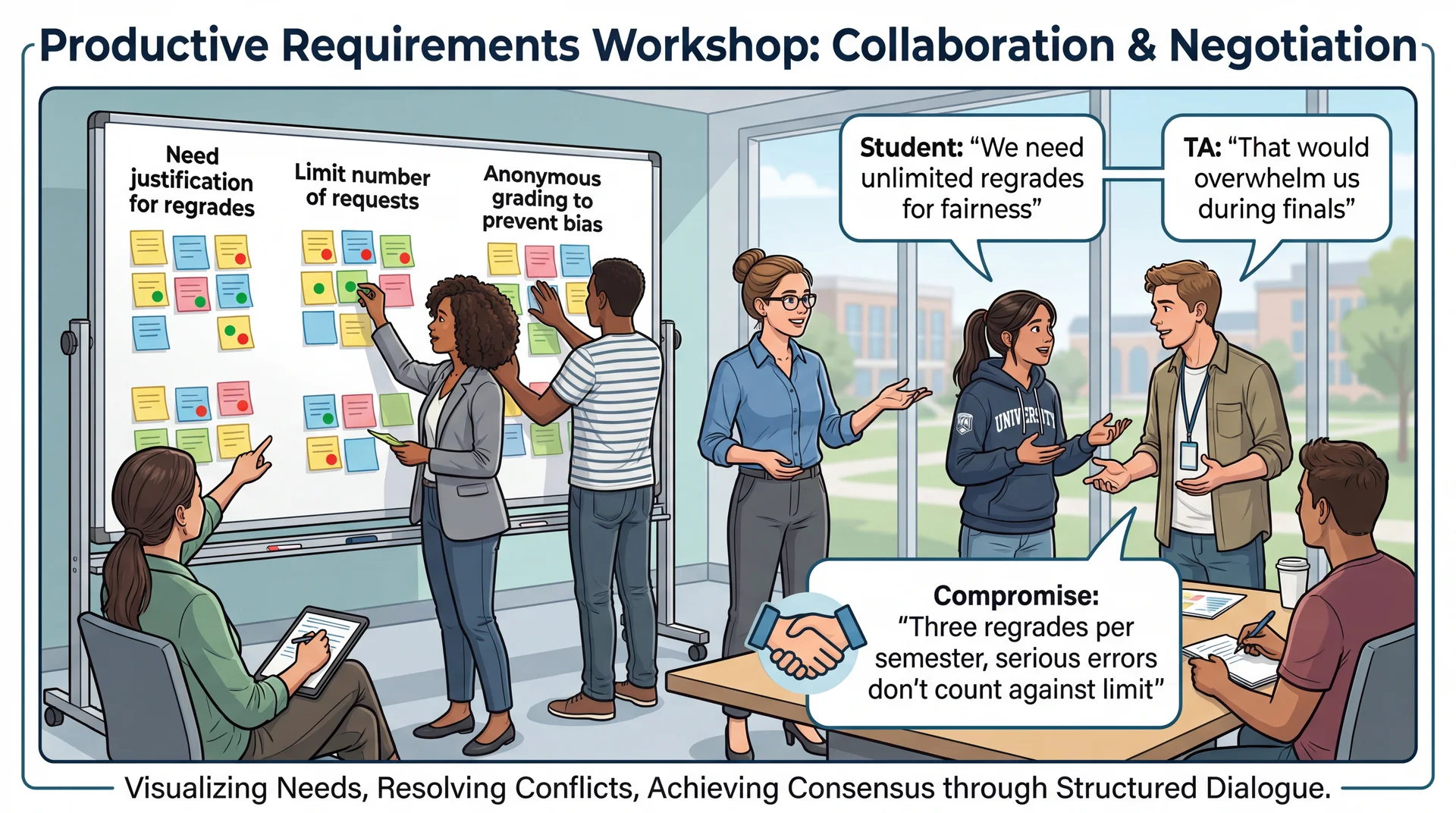

Stakeholder Interests Often Directly Conflict

| Conflict | Stakeholder 1 | Stakeholder 2 |
|---|---|---|
| Regrades | Students want unlimited requests for fairness | TAs want protection from frivolous requests |
| Analytics | Instructors want to audit all student code for trojan horses | Students want privacy of mistakes |
| Automation | TAs want fully automated grading | Instructors want nuanced evaluation |
| Feedback timing | Fast students want immediate feedback | TAs need to batch for efficiency |
| Audit trails | Administrators want detailed logs | TAs want quick, simple grade entry |

Resolving conflicts is a negotiation skill—understanding what each party truly needs and finding creative solutions.

Balancing Competing Stakeholder Needs

Example: Designing Pawtograder's regrade request feature

| Stakeholder | Need | Solution Element |
|---|---|---|
| Student | Easy ability to request regrades | Simple request form in Pawtograder |
| TA | Protection from frivolous requests | 7-day time limit after grades posted |
| Instructor | Oversight of grading quality | 3-day appeal window to instructor if unsatisfied |
| Administrator | Compliance and audit trail | Complete log of all regrade activities |

✓ A good solution addresses all concerns, not just the loudest voice.

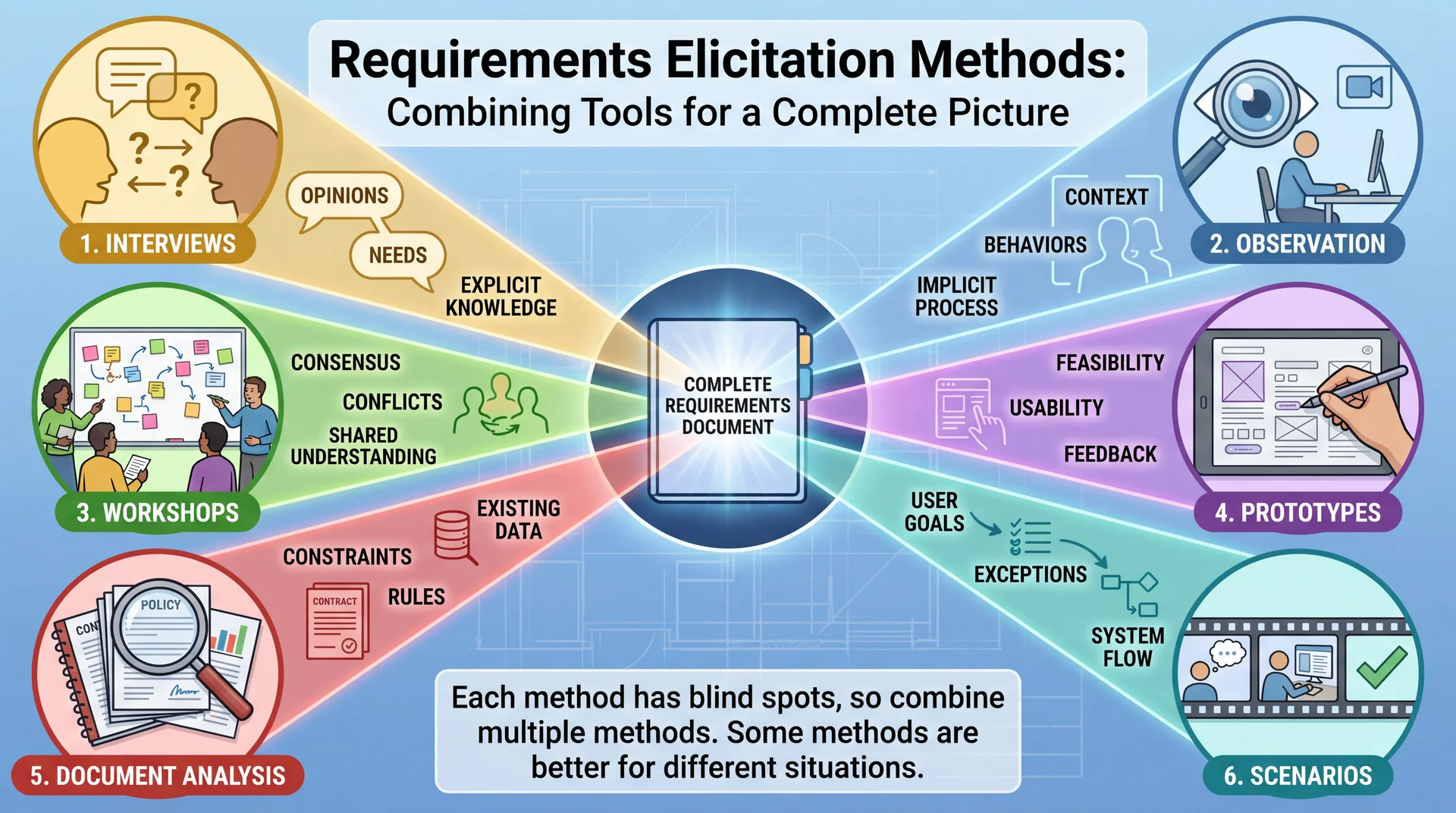

Different Methods Reveal Different Information

Method 1: Interviews Reveal What Stakeholders Say

Q: Walk me through grading a typical assignment from start to finish.

"I download submissions from Canvas, run them locally, open a spreadsheet, test each function manually..."

Q: What takes the most time?

"Setting up the test environment for each student's code, and writing the same feedback over and over."

Q: Tell me about the last time grading went really badly.

"A student submitted at 11:59 PM, I graded at midnight, but they claimed they resubmitted at 12:01 AM and I graded the wrong version..."

Techniques: Open-ended questions, critical incident technique, probing, silence

Method 2: Observation Reveals What Stakeholders Actually Do

| Time | Observed Activity |
|---|---|
| 2:00 PM | TA logs into Canvas, downloads submission ZIP |
| 2:05 PM | Discovers some submissions are .tar.gz (unexpected!) |
| 2:08 PM | Writes quick script to handle multiple archive formats |
| 2:15 PM | Opens first submission, realizes it's Python 2 not Python 3 |
| 2:18 PM | Sets up separate testing environment for Python 2 |
| 2:20 PM | Manually copies test cases from assignment PDF |
| 2:30 PM | Student order in spreadsheet doesn't match Canvas |
| 2:37 PM | Phone notification interrupts flow |
| 2:38 PM | Loses track of which submission was being graded |

⚠ File format issues, manual test copying, and interruptions weren't mentioned in interviews.

Method 3: Workshops Resolve Conflicts Early

Benefits: Stakeholders hear each other directly, creative solutions emerge, conflicts surface and resolve early, builds buy-in.

Method 4: Prototypes Make Abstract Ideas Concrete

Method 5: Document Analysis Reveals Hidden Constraints

Documents to analyze:

- Grading rubrics → evaluation criteria and point distributions

- Email complaints → common pain points and misunderstandings

- Grade appeal forms → existing process and requirements

- TA training materials → hidden complexities

- University policies → compliance constraints

- Previous syllabi → evolution of grading policies

Example from CS 3100 syllabus:

"All regrade requests must be submitted within 7 days from your receipt of the graded work. If your regrade request is closed and you feel that the response was not satisfactory, you may appeal to the instructor via Pawtograder within 3 days."

Requirements discovered: 7-day time limit after receipt (not posting), written form via Pawtograder, escalation path to the instructor, 3-day appeal window after a request is closed, and an implication that graders are not instructors.
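Writing the policy as code makes one subtle point explicit. The request window starts when the student receives the graded work, so the system must record a receipt time as well as a posting time. Below is a minimal Java sketch with hypothetical names. It assumes that both windows include their final instant and that a day is exactly 24 hours (`Duration.ofDays`). Stakeholders might decide either point differently.

```java
import java.time.Duration;
import java.time.Instant;

/** Hypothetical encoding of the syllabus regrade policy as two deadline checks. */
final class RegradePolicy {
    // "within 7 days from your receipt of the graded work" (not from posting)
    static final Duration REQUEST_WINDOW = Duration.ofDays(7);
    // "you may appeal to the instructor ... within 3 days" of the request being closed
    static final Duration APPEAL_WINDOW = Duration.ofDays(3);

    private RegradePolicy() {}

    /** True if a regrade request made at {@code now} is still on time. */
    static boolean canRequestRegrade(Instant receivedGradedWork, Instant now) {
        return !now.isAfter(receivedGradedWork.plus(REQUEST_WINDOW));
    }

    /** True if an appeal made at {@code now} is still on time. */
    static boolean canAppealToInstructor(Instant requestClosed, Instant now) {
        return !now.isAfter(requestClosed.plus(APPEAL_WINDOW));
    }
}

class RegradePolicyDemo {
    public static void main(String[] args) {
        Instant received = Instant.parse("2025-03-01T12:00:00Z");
        Instant now = Instant.parse("2025-03-09T12:00:00Z"); // eight days later
        // prints "Request allowed? false"
        System.out.println("Request allowed? " + RegradePolicy.canRequestRegrade(received, now));
    }
}
```

If the code had taken a posting time instead, it would silently encode the wrong policy. Document analysis is meant to catch exactly this kind of gap.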

Method 6: Scenarios Reveal Edge Cases

Scenario: End-of-Semester Grade Dispute

Sarah, a senior, needs a B+ to keep her scholarship. She receives a B after the final assignment. Checking her grades, she notices Assignment 3 seems low. She reviews the rubric and believes her recursive solution was incorrectly marked wrong.

She initiates a regrade request on December 15th. The original TA, Tom, is on winter break. The system escalates to the instructor, who must respond within 48 hours due to grade submission deadlines. The instructor realizes they never saw Sarah's original grade—they need visibility into all grading decisions, not just escalations...

Requirements revealed: Instructor visibility into all grades (not just appeals!), escalation when grader unavailable, urgency handling, time-sensitive responses.
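The escalation and urgency requirements from this scenario can be sketched in the same way. This is a hypothetical illustration. It assumes the system knows which graders are unavailable (for example, on winter break) and uses the scenario's 48-hour instructor response time. Real routing rules would come out of the stakeholder discussions described above.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Set;

/** Who should review a regrade request in this sketch. */
enum Reviewer { ORIGINAL_GRADER, INSTRUCTOR }

/** Hypothetical routing rules derived from the end-of-semester scenario. */
final class RegradeRouter {
    // From the scenario: the instructor must respond within 48 hours.
    static final Duration INSTRUCTOR_RESPONSE_TIME = Duration.ofHours(48);

    private RegradeRouter() {}

    /** Escalates straight to the instructor when the original grader is unavailable. */
    static Reviewer route(String originalGrader, Set<String> unavailableGraders) {
        return unavailableGraders.contains(originalGrader)
                ? Reviewer.INSTRUCTOR
                : Reviewer.ORIGINAL_GRADER;
    }

    /** Deadline for the instructor's response to an escalated request. */
    static Instant instructorRespondBy(Instant escalatedAt) {
        return escalatedAt.plus(INSTRUCTOR_RESPONSE_TIME);
    }
}

class RegradeRouterDemo {
    public static void main(String[] args) {
        Instant escalated = Instant.parse("2025-12-15T10:00:00Z");
        Reviewer who = RegradeRouter.route("tom", Set.of("tom")); // Tom is on winter break
        System.out.println(who + " must respond by " + RegradeRouter.instructorRespondBy(escalated));
    }
}
```

The sketch does not capture the instructor-visibility requirement. That requirement concerns access to every grade, and no routing rule alone provides it.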

Combine Methods for Complete Requirements

- Start broad: Interview stakeholders for general needs

- Get specific: Observe actual work to see hidden complexities

- Resolve conflicts: Run workshops with multiple stakeholders

- Validate understanding: Test with prototypes

- Check constraints: Analyze policy documents

- Verify completeness: Walk through detailed scenarios

Red flags to watch for:

- Only talking to managers (they don't do the actual work)

- Leading questions (you'll hear what you want to hear)

- Single method (each method has blind spots)

- Missing stakeholders (the quiet ones often have critical needs)

Key Takeaways

- Requirements analysis bridges vague needs to concrete specifications

- Three risk dimensions: Understanding, Scope, and Volatility

- Stakeholder analysis identifies everyone affected, including hidden stakeholders

- Multiple elicitation methods needed—each has blind spots

- Participatory approach: Engage users as design partners, not just requirement sources

The key insight: Requirements analysis isn't asking "what do you want?"—it's understanding problems deeply enough to design solutions stakeholders didn't know were possible.

Bonus Slide

Source: @_yes_but